Hi Folks,

Yesterday I was working with an Azure DevOps repository for my project. My local Develop branch was behind the Main branch by a few commits, and I wanted to pull the latest commits and bring the changes from Main into my Develop branch as well.

That is when I had to decide whether to use the Git merge command or the Git rebase command. Here are my findings on the same…

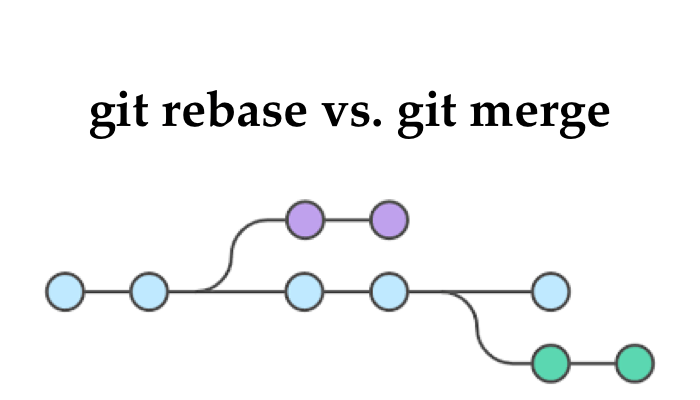

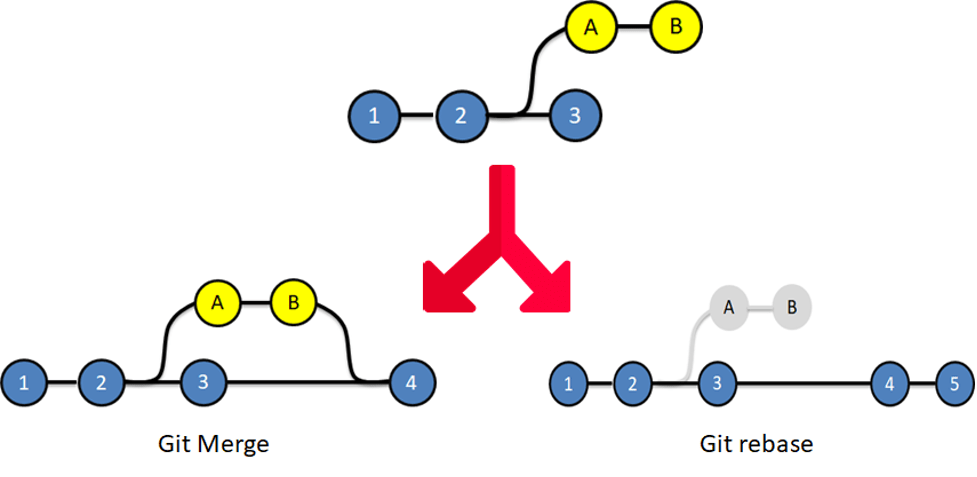

Looking into the details, the official Git manual describes rebase as “reapply commits on top of another base tip”, whereas merge is described as “join two or more development histories together”. In other words, the key difference between merge and rebase is that merge preserves history as it happened, while rebase rewrites it. The illustrations above should help with understanding; the differences follow below…

| Merge | Rebase |
| --- | --- |
| `git merge` joins the history of one branch into another. | `git rebase` reapplies the commits of one branch on top of another. |
| The log shows the complete history, including the merge commits. | The log is linear because the commits are rebased. |
| All the commits on the feature branch are kept as they are, and a single merge commit is added to the master branch (unless Git can fast-forward). Only a squash merge combines them into one commit. | All the commits are reapplied as new commits with new hashes, and the same number of commits is added to the master branch. |
| Use merge when the target branch is a shared branch. | Use rebase when the target branch is a private branch. |
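
To see these differences for yourself, here is a minimal sketch that tries both commands in a throwaway repository. The branch names (`main`, `develop`, `develop-merge`, `develop-rebase`) and the file names are only assumptions for the demo and are not taken from my project.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway repository so that no real project is touched.
repo="$(mktemp -d)"
cd "$repo"
git init -q
git symbolic-ref HEAD refs/heads/main      # make sure the first branch is called main
git config user.email "demo@example.com"   # hypothetical identity for the demo
git config user.name "Demo"

# One shared commit, then develop and main each get one commit of their own.
echo base > base.txt && git add . && git commit -q -m "base"
git checkout -q -b develop
echo dev > dev.txt && git add . && git commit -q -m "develop work"
git checkout -q main
echo main > main.txt && git add . && git commit -q -m "main work"

# Two copies of develop, so both strategies start from the same point.
git branch develop-merge develop
git branch develop-rebase develop
dev_before="$(git rev-parse develop)"

# Merge: the original develop commit is kept and a merge commit is added.
git checkout -q develop-merge
git merge -q --no-edit main
merges_after_merge="$(git rev-list --merges --count main..develop-merge)"

# Rebase: the develop commit is recreated on top of main, no merge commit.
git checkout -q develop-rebase
git rebase -q main
merges_after_rebase="$(git rev-list --merges --count main..develop-rebase)"
rebased_tip="$(git rev-parse develop-rebase)"

echo "merge commits after merge:  $merges_after_merge"
echo "merge commits after rebase: $merges_after_rebase"
git log --oneline --graph develop-merge
git log --oneline --graph develop-rebase
```

The two `git log --graph` outputs at the end make the difference visible: the merged branch shows a fork that joins again, while the rebased branch is a straight line whose top commit has a new hash.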

To be honest, both serve the same purpose and each has its own uses, but at the end of the day the choice is completely up to your ALM strategy/methodology. I preferred merge over rebase because my branch is a shared branch, and for simplicity.
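
For the scenario from the start of this post, the merge route comes down to three commands: `git fetch`, `git checkout` and `git merge`. Below is a rough sketch of it, not the exact commands from my project. To keep it runnable anywhere, a local bare repository stands in for the Azure DevOps remote, and the remote name `origin`, the branch names `main` and `develop`, and the file names are all assumptions.

```shell
#!/usr/bin/env bash
set -euo pipefail

work="$(mktemp -d)"

# A local bare repository stands in for the Azure DevOps remote (demo assumption).
git init -q --bare "$work/origin.git"

# My working copy: push main, then start develop from it.
git clone -q "$work/origin.git" "$work/local" 2>/dev/null
cd "$work/local"
git symbolic-ref HEAD refs/heads/main
git config user.email "me@example.com"     # hypothetical identity
git config user.name "Me"
echo base > base.txt && git add . && git commit -q -m "base"
git push -q origin main
git checkout -q -b develop
echo dev > dev.txt && git add . && git commit -q -m "develop work"

# A teammate's clone pushes a new commit to main, so develop falls behind.
git clone -q -b main "$work/origin.git" "$work/teammate"
cd "$work/teammate"
git config user.email "mate@example.com"   # hypothetical identity
git config user.name "Mate"
echo main > main.txt && git add . && git commit -q -m "main work"
git push -q origin main

# The actual workflow, run in my working copy.
cd "$work/local"
git fetch -q origin                        # 1. get the latest commits from the remote
behind_before="$(git rev-list --count develop..origin/main)"
git checkout -q develop                    # 2. switch to the branch that is behind
git merge -q --no-edit origin/main         # 3. merge main into develop
behind_after="$(git rev-list --count develop..origin/main)"

echo "develop was behind main by $behind_before commit(s), now by $behind_after"
```

In a real repository only the three numbered commands are needed; everything before them just builds the situation where develop is behind main.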

Hope this helps…

Cheers,

PMDY