Many people don't know the significance of MIME types, so this post gives a brief introduction to them before I move on to security concepts in Power Platform in my upcoming articles.

In the Microsoft Power Platform, MIME types (Multipurpose Internet Mail Extensions) are standardized labels used to identify the format of data files. They are critical for ensuring that applications like Power Apps, Power Automate, and Power Pages can correctly process, display, or transmit files.

Core Functions in Power Platform

- Dataverse Storage: Tables such as

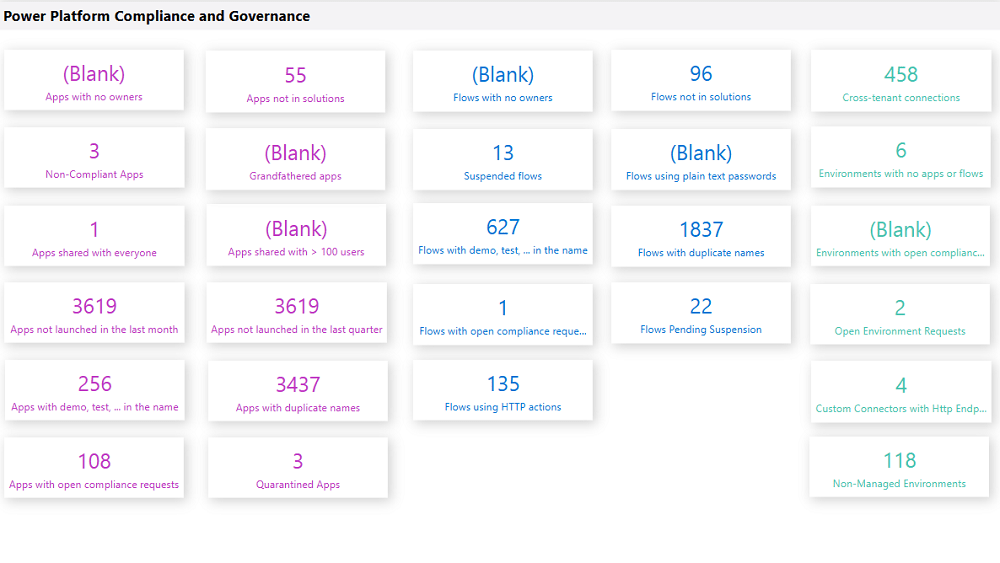

ActivityMimeAttachmentandAnnotation(Notes) use a dedicated MimeType column to store the format of attached files alongside their Base64-encoded content. - Security & Governance: Administrators can use the Power Platform Admin Center to block specific “dangerous” MIME types (e.g., executables) from being uploaded as attachments to protect the environment.

- Power Automate Approvals: You can configure approval flows to fail if they contain blocked file types, providing an extra layer of security for email notifications.

- Power Pages (Web Templates): When creating custom web templates, the MIME type field controls how the server responds to a browser. For example, templates generating JSON must be set to

application/jsonto be parsed correctly. - Email Operations: When using connectors like Office 365 Outlook, you must specify the MIME type for attachments (e.g.,

application/pdffor PDFs) so the recipient’s client can open them properly.
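To make the Dataverse Storage point above more concrete, here is a minimal Python sketch of how a Note record pairs a MIME type with Base64-encoded content. The column names (`subject`, `filename`, `mimetype`, `documentbody`) are my assumption of the Note (annotation) table's logical names, and `build_note_payload` is a hypothetical helper, so please verify the names in your own environment before relying on them.

```python
import base64
import json


def build_note_payload(file_name: str, data: bytes, mime_type: str) -> dict:
    """Return a dict shaped like a Dataverse Note (annotation) record.

    Column names are assumed logical names; check them in your environment.
    """
    return {
        "subject": f"Attachment: {file_name}",
        "filename": file_name,
        # The MIME type travels next to the content so clients know the format.
        "mimetype": mime_type,
        # File bytes are stored as Base64 text, not raw binary.
        "documentbody": base64.b64encode(data).decode("ascii"),
    }


payload = build_note_payload("report.pdf", b"%PDF-1.7 sample bytes", "application/pdf")
print(json.dumps(payload, indent=2))
```

The takeaway is only the shape of the record: the MIME type is stored as its own value beside the encoded file, which is why a wrong value there can make a perfectly good file fail to open.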

Common MIME Types Used

| File Extension | MIME Type |
|---|---|
| .pdf | application/pdf |
| .docx | application/vnd.openxmlformats-officedocument.wordprocessingml.document |
| .xlsx | application/vnd.openxmlformats-officedocument.spreadsheetml.sheet |
| .png | image/png |
| .jpg | image/jpeg |
| .json | application/json |
| Unknown | application/octet-stream (used for generic binary files) |

Implementing MIME type handling and file restrictions ensures your Power Platform solutions are both functional and secure.

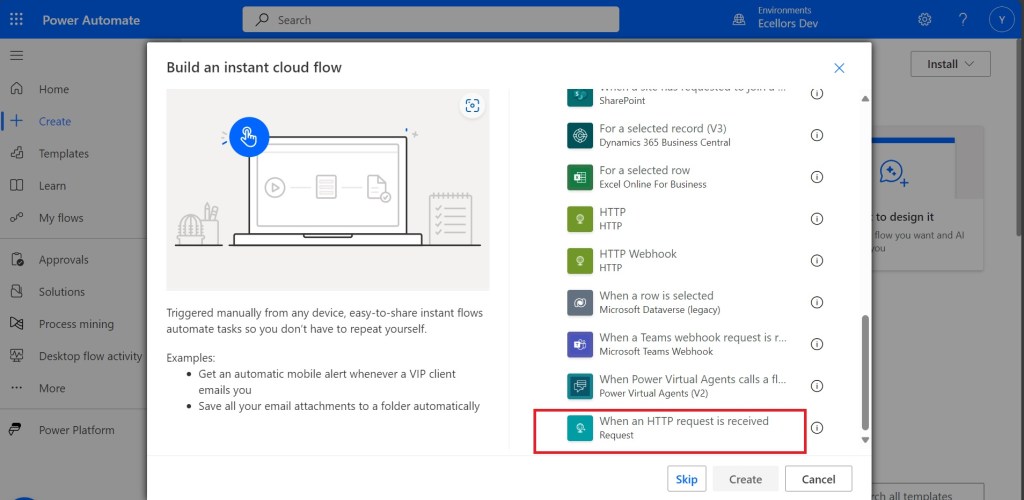

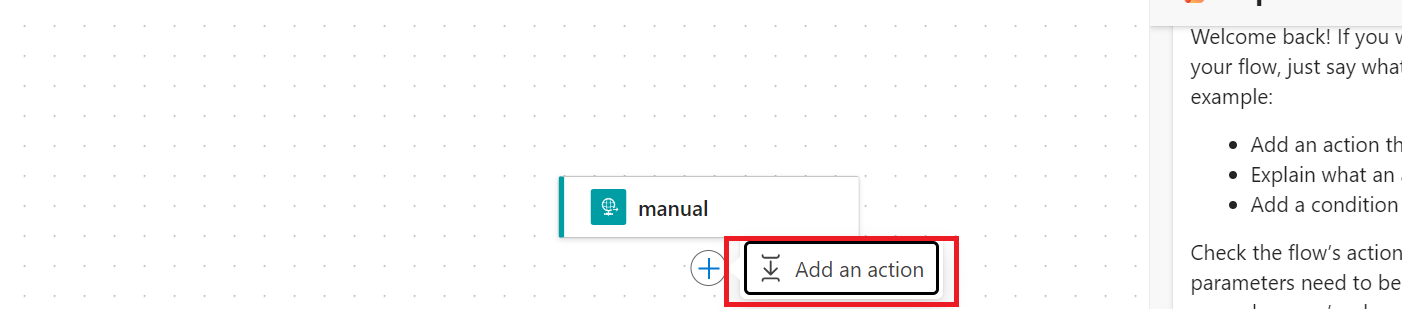

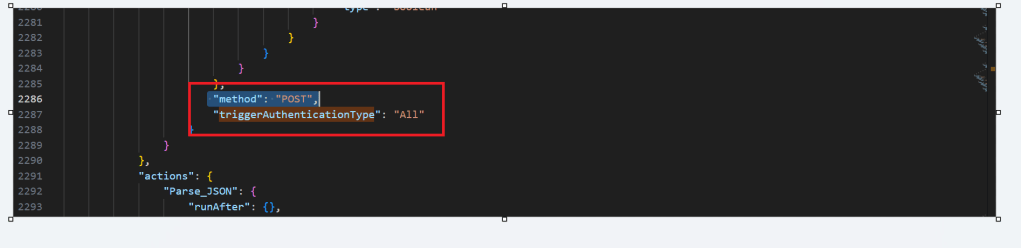

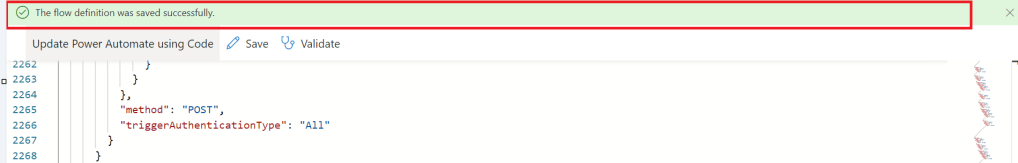

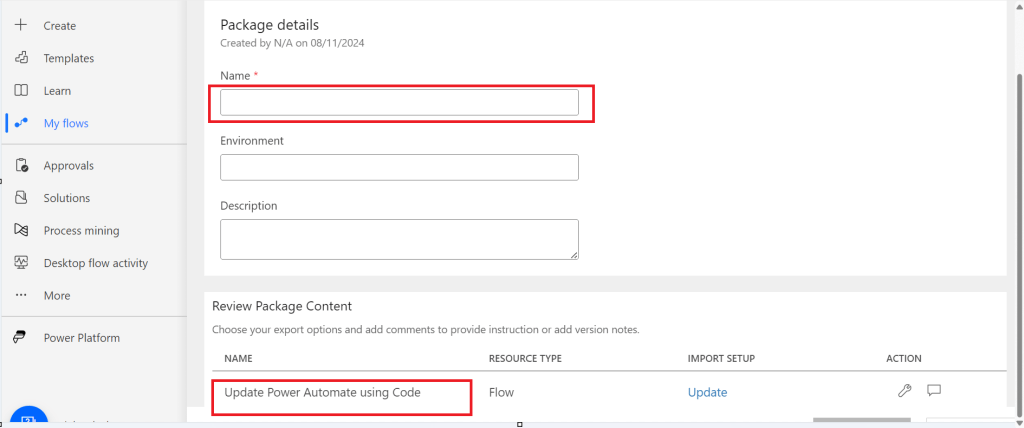

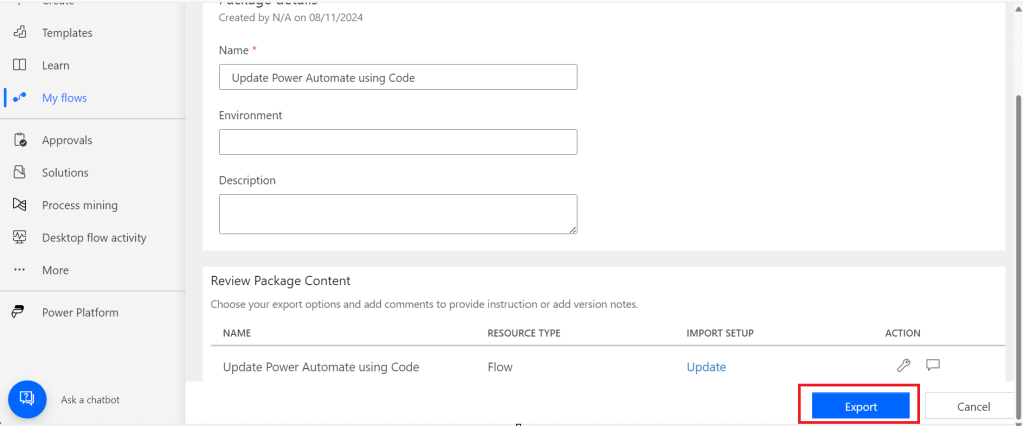

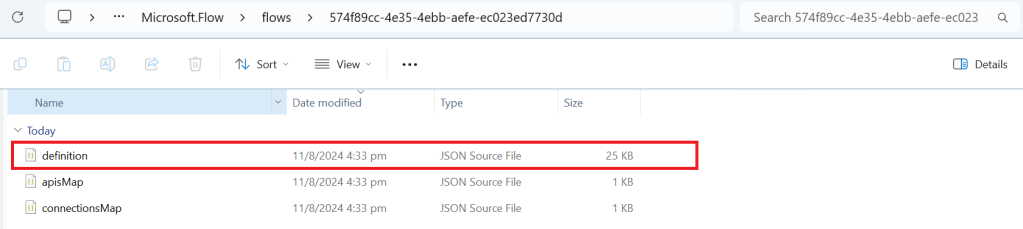

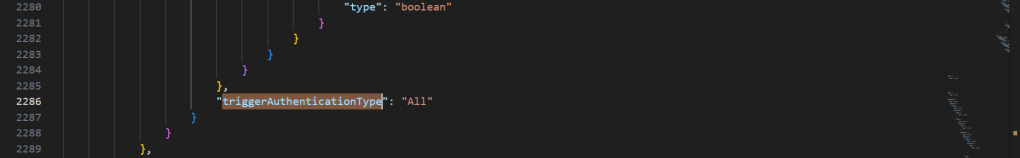

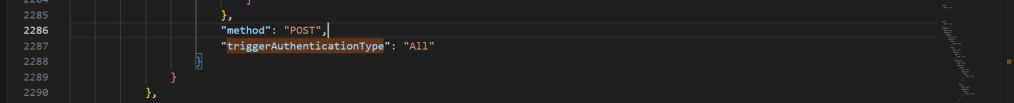

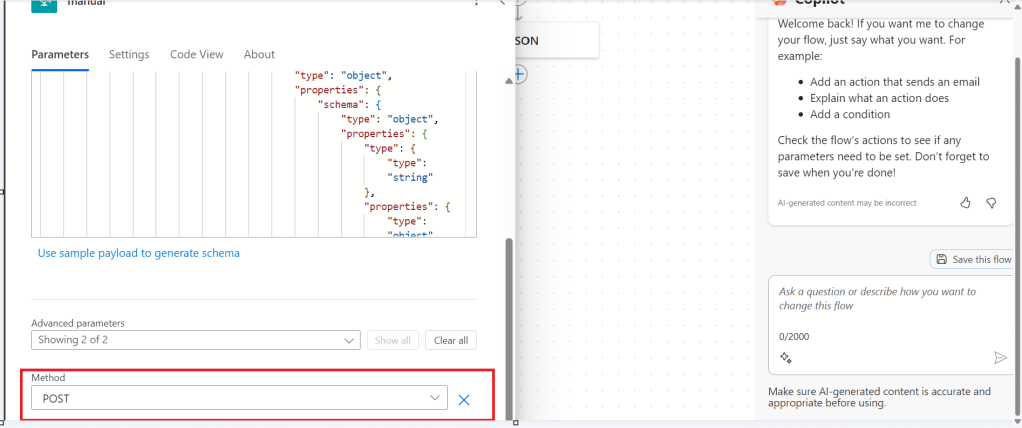

1. Programmatically Setting MIME Types in Power Automate

When working with file content in Power Automate, you often need to define the MIME type within a JSON object so connectors (like Outlook or HTTP) understand how to process the data.

- Structure: Use a Compose action to build a file object with the $content-type (MIME type) and $content (Base64 data) properties.

- Dynamic Mapping: If you don’t know the file type in advance, you can use an expression to map extensions to MIME types or use connectors like Cloudmersive to automatically detect document type information.

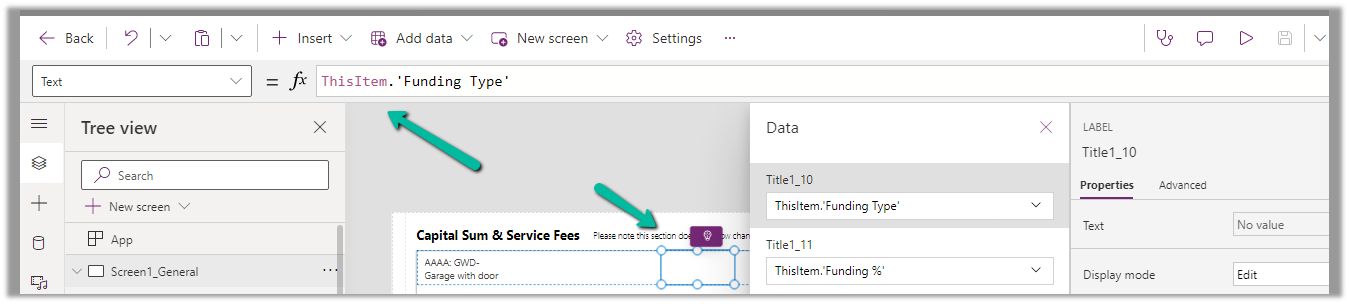
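The structure and dynamic mapping ideas above can also be sketched outside Power Automate. The Python below is only an illustration, not a flow definition: it builds an object with the same `$content-type` and `$content` keys and picks the MIME type from the file extension using the table from earlier in this post. The function names `mime_type_for` and `build_file_object` are hypothetical.

```python
import base64
import os

# Extension-to-MIME lookup taken from the table in this post.
EXTENSION_TO_MIME = {
    ".pdf": "application/pdf",
    ".docx": "application/vnd.openxmlformats-officedocument.wordprocessingml.document",
    ".xlsx": "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet",
    ".png": "image/png",
    ".jpg": "image/jpeg",
    ".json": "application/json",
}

# Generic binary type used when the extension is not recognised.
FALLBACK_MIME_TYPE = "application/octet-stream"


def mime_type_for(file_name: str) -> str:
    """Map a file name to a MIME type, ignoring the case of the extension."""
    extension = os.path.splitext(file_name)[1].lower()
    return EXTENSION_TO_MIME.get(extension, FALLBACK_MIME_TYPE)


def build_file_object(file_name: str, data: bytes) -> dict:
    """Build a file object with the $content-type and $content keys."""
    return {
        "$content-type": mime_type_for(file_name),
        "$content": base64.b64encode(data).decode("ascii"),
    }


file_object = build_file_object("Invoice.PDF", b"abc")
print(file_object)
```

Falling back to application/octet-stream keeps the object valid for unknown extensions, at the cost of the recipient not knowing how to preview the file.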

2. Restricting File Types in Power Apps

The Attachment control in Power Apps does not have a built-in “allowed types” property, so you must use Power Fx formulas to validate files after they are added.

- Validation on Add: Use the OnAddFile property of the attachment control to check the extension and notify the user if it’s invalid.

- Submit Button Logic: For added security, set the DisplayMode of your Submit button to Disabled if any attachment in the list doesn’t match your criteria.

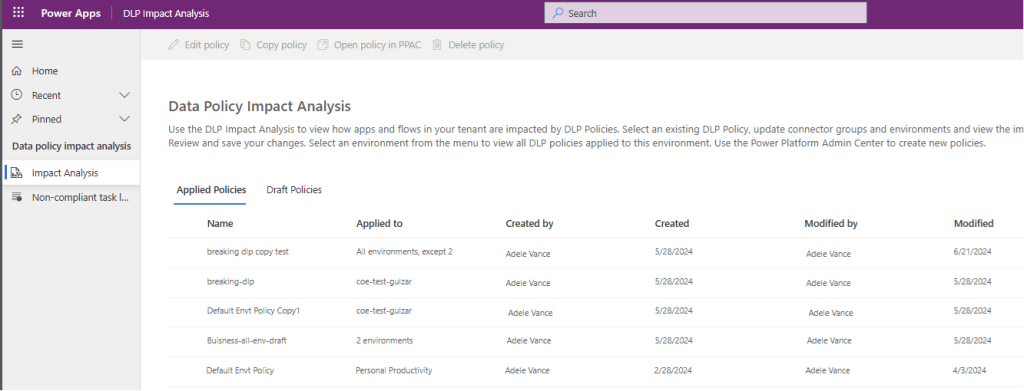
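The logic behind both checks is small enough to sketch in a few lines. This Python version is not Power Fx and is only meant to show the decision being made: find attachments whose extension is not on an allow list, then decide whether the Submit button should be disabled. The allow list and the function names are hypothetical, and the returned strings simply mirror the Disabled and Edit display modes.

```python
import os

# Hypothetical allow list; adjust to the file types your app accepts.
ALLOWED_EXTENSIONS = {".pdf", ".docx", ".png"}


def invalid_attachments(file_names: list[str]) -> list[str]:
    """Return the names whose extension is not in the allow list."""
    return [
        name
        for name in file_names
        if os.path.splitext(name)[1].lower() not in ALLOWED_EXTENSIONS
    ]


def submit_display_mode(file_names: list[str]) -> str:
    """Return "Disabled" if any attachment is invalid, otherwise "Edit"."""
    return "Disabled" if invalid_attachments(file_names) else "Edit"


attachments = ["contract.pdf", "setup.exe", "logo.PNG"]
print(invalid_attachments(attachments))
print(submit_display_mode(attachments))
```

Keep in mind that this kind of check only looks at the file name, so it complements the environment-level blocking described in the next section rather than replacing it.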

3. Global Restrictions (Admin Center)

To enforce security across the entire environment, administrators can navigate to the Power Platform Admin Center to manage blocked file extensions and MIME types. Adding an extension to the blocked file extensions list prevents users from uploading files of that type as Notes or email attachments in Dataverse.

Hope this helps. In the next post, I will talk about Content Security Policy and how Power Platform can be secured through different configuration settings.

Cheers,

PMDY