Hi Folks,

Thank you for visiting my blog today. I believe many consultants and Power Platform professionals out there may not know about HashSet, which has been available in .NET since version 3.5.

So, what is a HashSet? Here is a brief overview.

HashSet is a data structure that many of us may not have come across; I hadn't either until I needed it for one of my requirements. It offers several benefits over other data structures for specific use cases. Below is a use case where HashSet is more useful than the other data structures available, followed by its advantages and disadvantages.

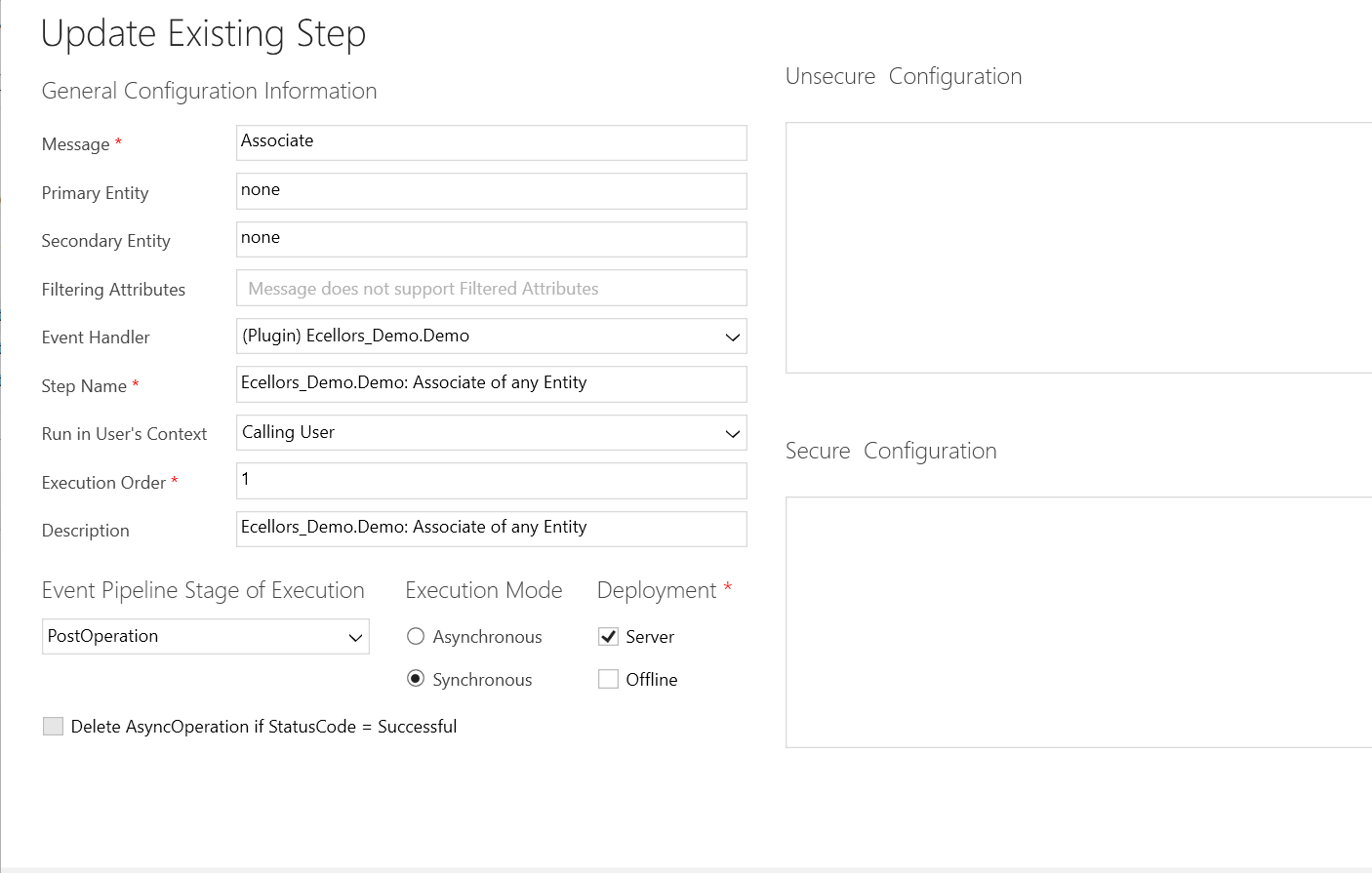

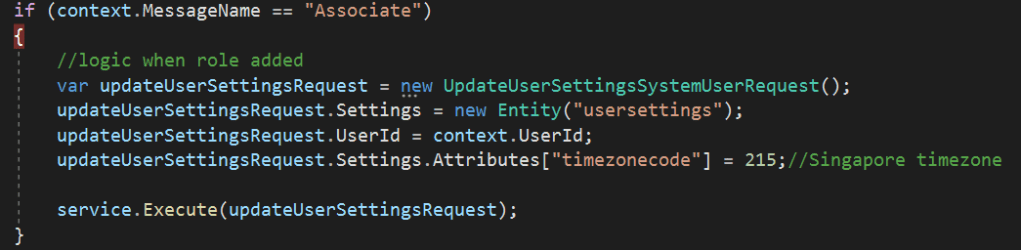

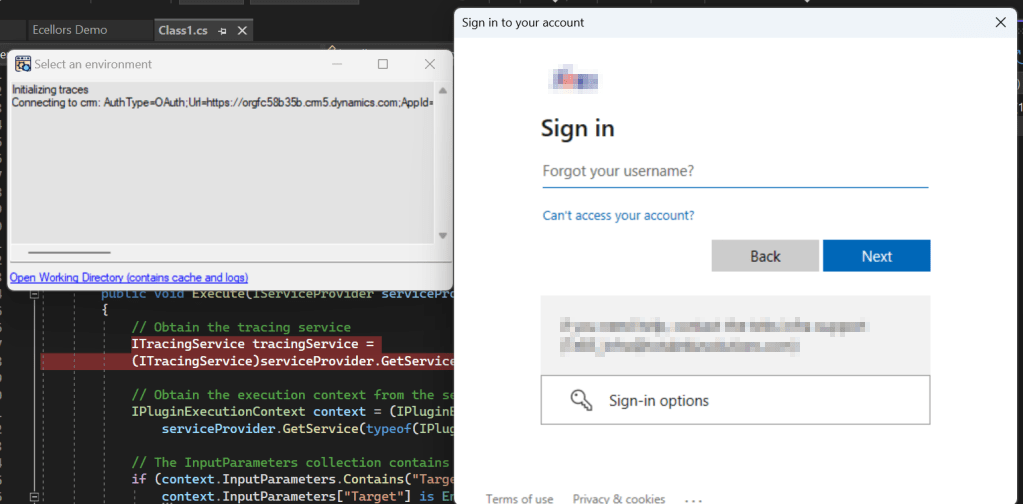

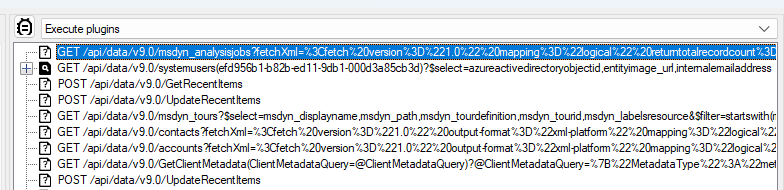

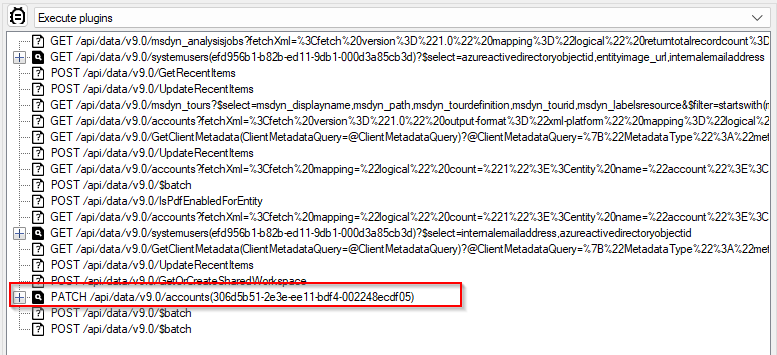

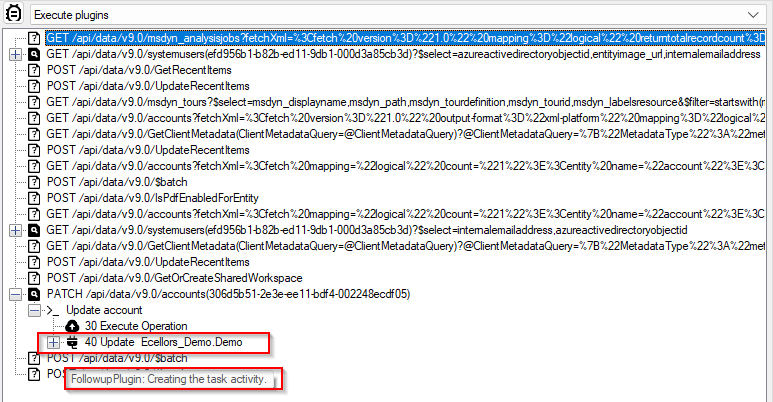

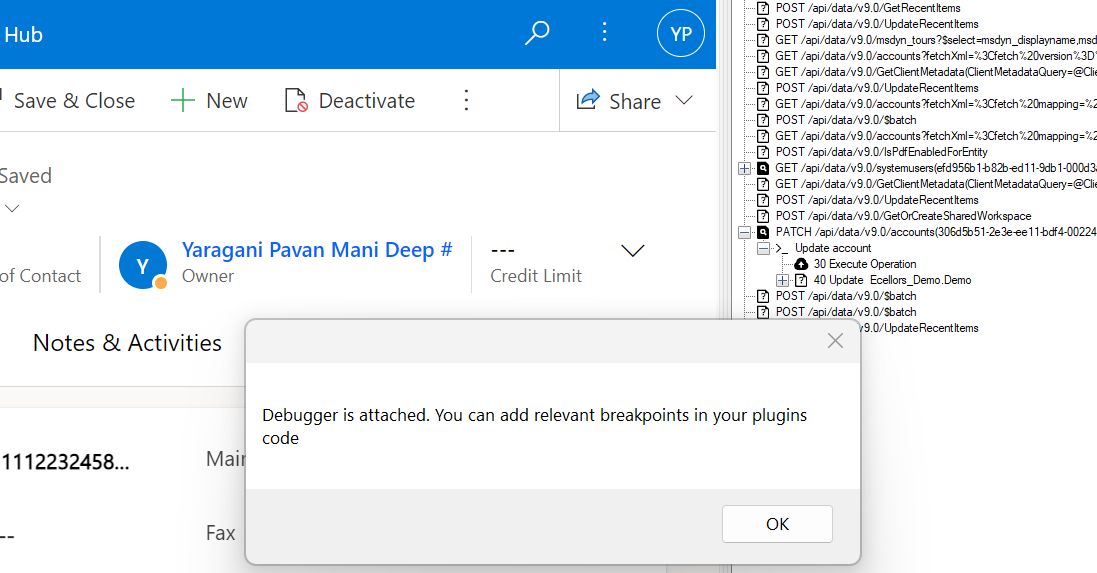

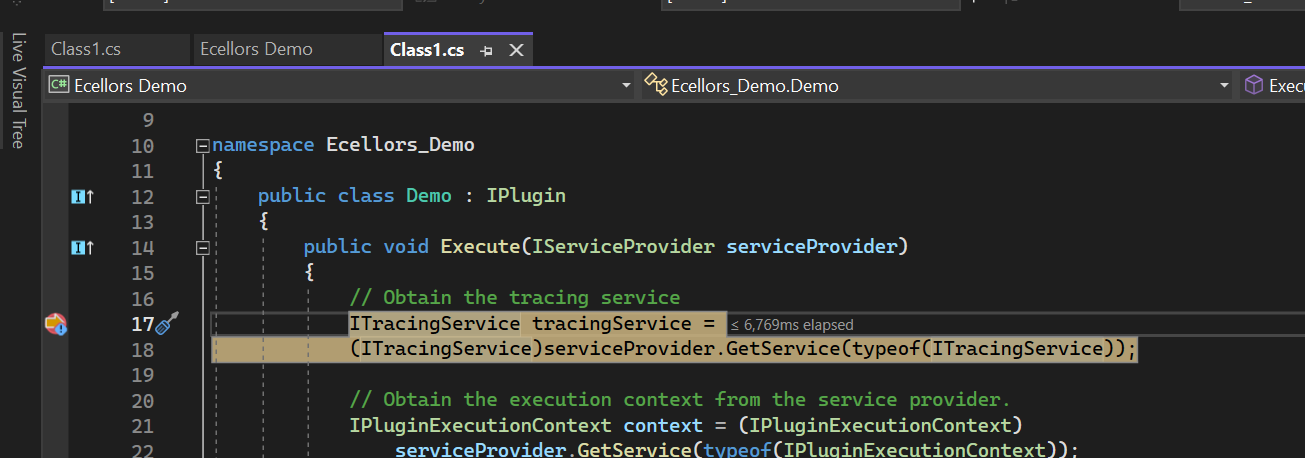

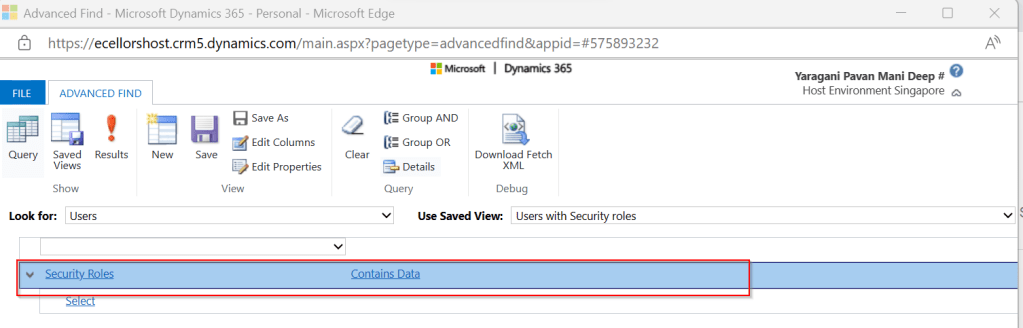

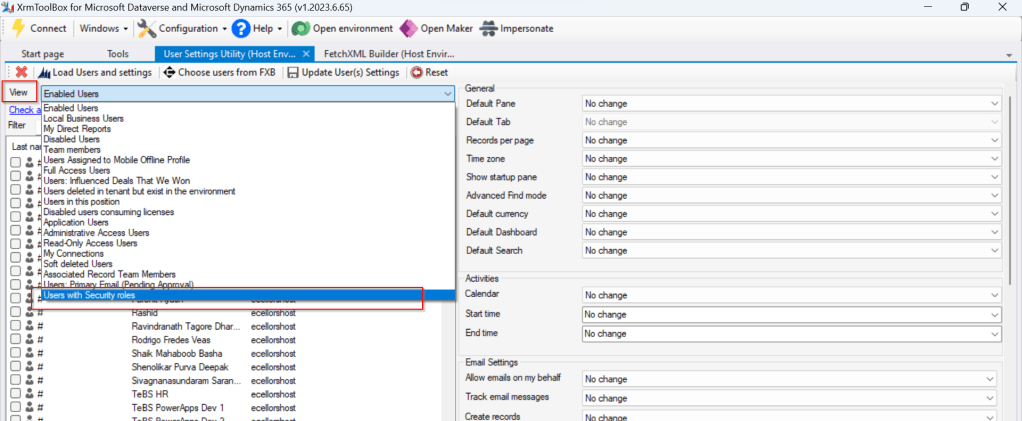

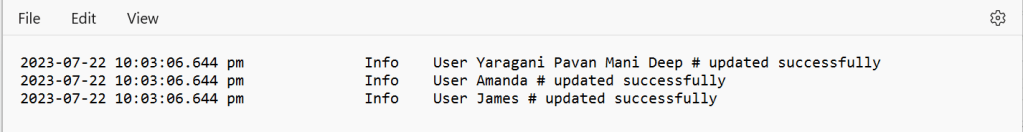

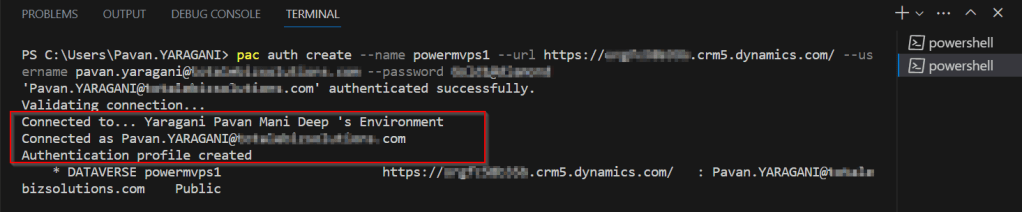

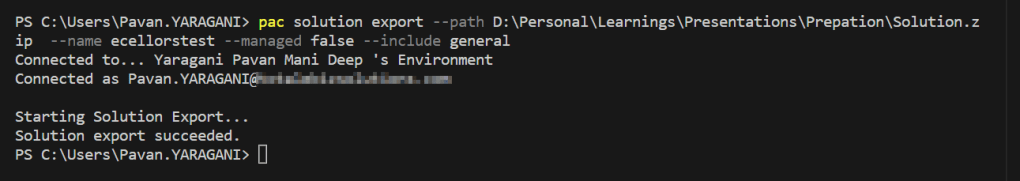

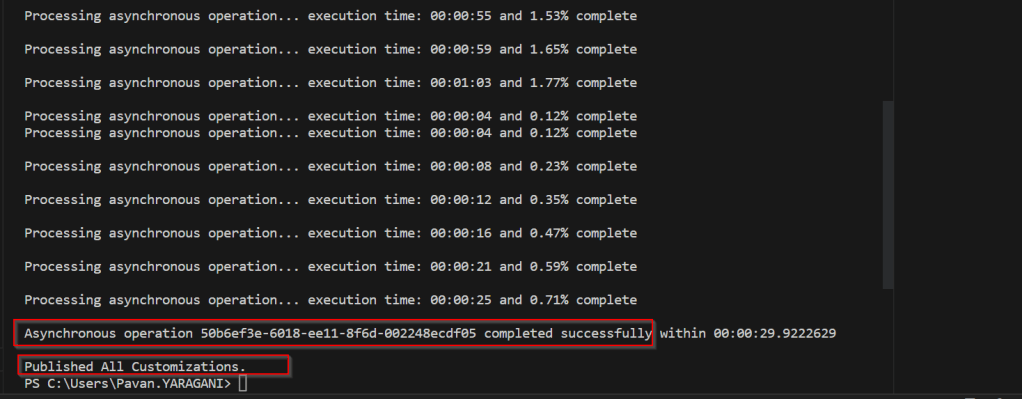

Scenario: I had a requirement to send an email to the owners of records from a custom workflow whenever a record is updated. Many of the records have the same owner, so the same email address was being added to the To activity party multiple times, which I wanted to prevent. That is when I searched and came across HashSet.

In this way, you can get the owner of each record and add it to the HashSet, as shown in the diagram above. Because a HashSet ignores a value it already contains, it is an ideal way to prevent duplicates in scenarios like this.
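To make the idea concrete, here is a minimal sketch of the de-duplication step in plain C#. It assumes the owner email addresses have already been read from the records; the `RecipientHelper` class and `UniqueRecipients` method are hypothetical names for illustration, not part of any SDK, and the case-insensitive comparer is my own assumption about how email addresses should be matched.

```csharp
using System;
using System.Collections.Generic;

public static class RecipientHelper
{
    // Hypothetical helper: collapses a list of owner email addresses
    // into a set that contains each address only once.
    public static HashSet<string> UniqueRecipients(IEnumerable<string> ownerEmails)
    {
        // Assumption: "A@contoso.com" and "a@contoso.com" are the same mailbox,
        // so the set compares strings without regard to case.
        var recipients = new HashSet<string>(StringComparer.OrdinalIgnoreCase);

        foreach (var email in ownerEmails)
        {
            // Skip owners that have no email address at all.
            if (string.IsNullOrWhiteSpace(email))
            {
                continue;
            }

            // Add returns false and leaves the set unchanged
            // when the address is already present.
            recipients.Add(email.Trim());
        }

        return recipients;
    }
}
```

You would then loop over the returned set once and create one To activity party per entry, instead of one per record.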

Advantages:

- Fast Lookup: Membership checks (Contains) take constant time on average, so it is efficient for tasks that involve frequent lookups.

- Uniqueness: All elements are unique. It automatically handles duplicates and maintains a collection of distinct values. This is useful when you need to eliminate duplicates from a collection.

- No Order: It does not maintain any specific order of elements. If the order of elements doesn’t matter for your use case, using a HashSet can be more efficient than other data structures like lists or arrays, which need to maintain a specific order.

- Set Operations: It supports set operations like union, intersection, and difference efficiently (in .NET these are UnionWith, IntersectWith, and ExceptWith). This is beneficial when you need to compare or combine sets of data, as it can help avoid nested loops and improve performance.

- Hashing: It relies on hashing to store and retrieve elements. Hashing allows for quick data access and is suitable for applications where fast data retrieval is crucial.

- Scalability: It typically scales well with a large number of elements, as long as the hash function is well-distributed, and collisions are minimal.
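The set operations mentioned above can be sketched as follows. `UnionWith`, `IntersectWith`, and `ExceptWith` are the actual `HashSet<T>` methods; the `SetOperationsDemo` wrapper class and its method names are only there for illustration. Note that these methods modify the set they are called on, which is why each helper copies the first input before applying the operation.

```csharp
using System;
using System.Collections.Generic;

public static class SetOperationsDemo
{
    // All values that appear in a, in b, or in both.
    public static HashSet<int> Union(IEnumerable<int> a, IEnumerable<int> b)
    {
        var result = new HashSet<int>(a); // copy, so the caller's data is untouched
        result.UnionWith(b);
        return result;
    }

    // Only the values that appear in both a and b.
    public static HashSet<int> Intersection(IEnumerable<int> a, IEnumerable<int> b)
    {
        var result = new HashSet<int>(a);
        result.IntersectWith(b);
        return result;
    }

    // The values of a that do not appear in b.
    public static HashSet<int> Difference(IEnumerable<int> a, IEnumerable<int> b)
    {
        var result = new HashSet<int>(a);
        result.ExceptWith(b);
        return result;
    }
}
```

For example, with the inputs 1, 2, 3 and 3, 4, the union holds 1, 2, 3, 4, the intersection holds only 3, and the difference holds 1 and 2.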

Limitations include:

- Lack of order: If you need to maintain the order of elements, then this is not a good candidate for your implementation.

- Space usage: It uses more memory per element than a plain list or array because of the hash table overhead, so it may not be the best choice when memory optimization is a priority.

- Limited Metadata: It stores only the elements themselves, which means you have no place to keep associated metadata or values. If you need to associate additional data with keys, consider other data structures such as Dictionary (the .NET counterpart of a hash map) or custom classes.
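If you hit the first or the last of these limitations, two common workarounds can be sketched as below. This is only an illustration under my own assumptions: the `HashSetWorkarounds` class and both method names are hypothetical. The first method pairs a HashSet with a List to keep the original order, and the second uses a Dictionary when each key needs a value next to it.

```csharp
using System;
using System.Collections.Generic;

public static class HashSetWorkarounds
{
    // Removes duplicates but keeps the first occurrence of each item
    // in its original position.
    public static List<string> DistinctInOrder(IEnumerable<string> items)
    {
        var seen = new HashSet<string>();
        var ordered = new List<string>();

        foreach (var item in items)
        {
            // Add returns true only the first time an item is seen.
            if (seen.Add(item))
            {
                ordered.Add(item);
            }
        }

        return ordered;
    }

    // Maps each email address to the first owner name seen for it.
    public static Dictionary<string, string> NamesByEmail(
        IEnumerable<KeyValuePair<string, string>> emailAndName)
    {
        // Assumption: email addresses are compared without regard to case.
        var map = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);

        foreach (var pair in emailAndName)
        {
            if (!map.ContainsKey(pair.Key))
            {
                map[pair.Key] = pair.Value;
            }
        }

        return map;
    }
}
```

Both helpers still rely on hashing for the duplicate check, so the lookup stays fast; they just add the ordering or the extra value that a bare HashSet does not give you.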

I hope this gives you an overview of using HashSet. However, you can't use HashSet in every scenario; it really depends on your use case, so please check the disadvantages too before using it. If you have any questions, don't hesitate to ask.

Thank you and keep rocking…

Cheers,

PMDY