The Solution blueprint review is a workshop that covers all required topics. The workshop can also be conducted remotely. When it is done remotely, the review is typically divided into several sessions over several days.

The following sections cover the top-level topics of the Solution blueprint review and provide a sampling of the types of questions that are covered in each section.

Program strategy

Program strategy covers the process and structures that will guide the implementation. It also reviews the approach that will be used to capture, validate, and manage requirements, and the plan and schedule for creation and adoption of the solution.

This topic focuses on answering questions such as:

- What are the goals of the implementation, and are they documented, well understood, and measurable?

- What is the methodology being used to guide the implementation, and is it well understood by the entire implementation team?

- What is the structure that is in place for the team that will conduct the implementation?

- Are roles and responsibilities of all project roles documented and understood?

- What is the process to manage scope and changes to scope, status, risks, and issues?

- What is the plan and timeline for the implementation?

- What is the approach to managing work within the plan?

- What are the external dependencies and how are they considered in the project plan?

- What are the timelines for planned rollout?

- What is the approach to change management and adoption?

- What is the process for gathering, validating, and approving requirements?

- How and where will requirements be tracked and managed?

- What is the approach for traceability between requirements and other aspects of the implementation (such as testing, training, and so on)?

- What is the process for assessing fits and gaps?
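The traceability question above can be made concrete with a small sketch. The Python below is only an illustration under assumptions: the `Requirement` class, its fields, and the sample IDs are hypothetical and are not part of Dynamics 365 or Azure DevOps. In a real implementation this data would usually live in a work-item tracking tool. The sketch flags requirements that are missing a linked test case or training item.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """A requirement and the artifacts that trace back to it (hypothetical model)."""
    req_id: str
    title: str
    test_cases: list[str] = field(default_factory=list)
    training_items: list[str] = field(default_factory=list)


def untraced(requirements: list[Requirement]) -> list[str]:
    """Return IDs of requirements that lack a linked test case or training item."""
    return [
        r.req_id
        for r in requirements
        if not r.test_cases or not r.training_items
    ]


# Sample data, invented for illustration only.
requirements = [
    Requirement("REQ-001", "Create sales order", ["TC-010"], ["TR-003"]),
    Requirement("REQ-002", "Post vendor invoice", ["TC-011"], []),
    Requirement("REQ-003", "Approve purchase requisition"),
]

print(untraced(requirements))
```

A report like this, run regularly, is one possible way to show reviewers that traceability is being maintained rather than assumed.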

Test strategy

Test strategy covers the various aspects of the implementation that deal with validating that the implemented solution works as defined and will meet the business need.

This topic focuses on answering questions such as:

- What are the phases of testing and how do they build on each other to ensure validation of the solution?

- Who is responsible for defining, building, implementing, and managing testing?

- What is the plan to test performance?

- What is the plan to test security?

- What is the plan to test the cutover process?

- Has a regression testing approach been planned that will allow for efficient uptake of updates?

Business process strategy

Business process strategy considers the underlying business processes (the functionality) that will be implemented on the Microsoft Dynamics 365 platform as part of the solution and how these processes will be used to drive the overall solution design.

This topic focuses on answering questions such as:

- What are the top processes that are in scope for the implementation?

- What is currently known about the general fit for the processes within the Dynamics 365 application set?

- How are processes being managed within the implementation and how do they relate to subsequent areas of the solution such as user stories, requirements, test cases, and training?

- Is the business process implementation schedule documented and understood?

- Are requirements established for offline implementation of business processes?

Based on the processes that are in scope, the solution architect who is conducting the review might ask a series of feature-related questions to gauge complexity or understand potential risks or opportunities to optimize the solution based on the future product roadmap.

Application strategy

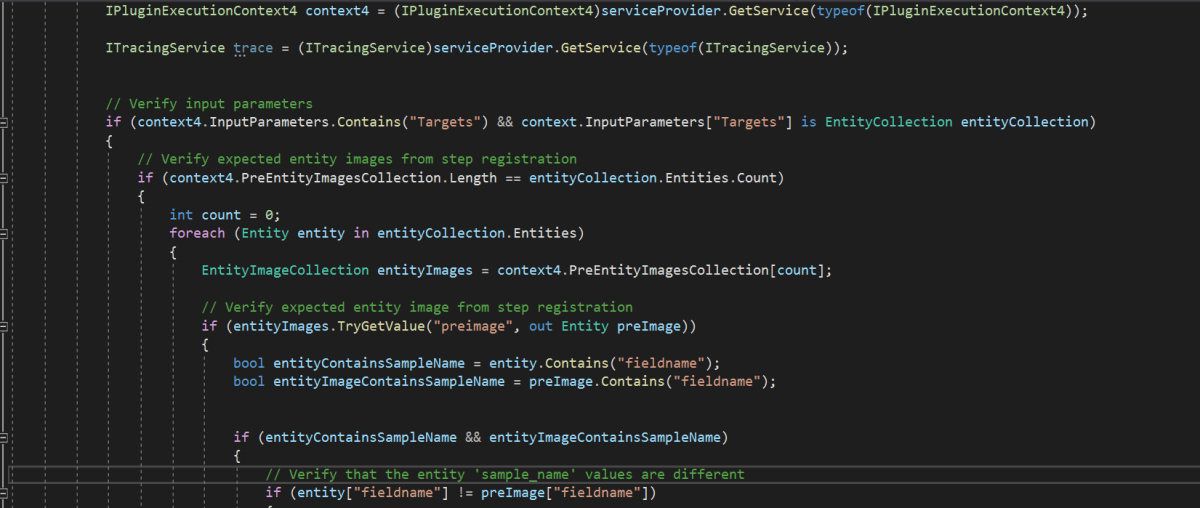

Application strategy considers the various apps, services, and platforms that will make up the overall solution.

This topic focuses on answering questions such as:

- Which Dynamics 365 applications or services will be deployed as part of the solution?

- Which Microsoft Azure capabilities or services will be deployed as part of the solution?

- What new external application components or services will be deployed as part of the solution?

- What legacy application components or services will be deployed as part of the solution?

- What extensions to the Dynamics 365 applications and platform are planned?

Data strategy

Data strategy considers the design of the data within the solution and the design for how legacy data will be migrated to the solution.

This topic focuses on answering questions such as:

- What are the plans for key data design issues like legal entity structure and data localization?

- What is the scope and planned flow of key master data entities?

- What is the scope and planned flow of key transactional data entities?

- What is the scope of data migration?

- What is the overall data migration strategy and approach?

- What are the overall volumes of data to be managed within the solution?

- What are the steps that will be taken to optimize data migration performance?

Integration strategy

Integration strategy considers the design of communication and connectivity between the various components of the solution. This strategy includes the application interfaces, middleware, and the processes that are required to manage the operation of the integrations.

This topic focuses on answering questions such as:

- What is the scope of the integration design at an interface/interchange level?

- What are the known non-functional requirements, like transaction volumes and connection modes, for each interface?

- What are the design patterns that have been identified for use in implementing interfaces?

- What are the design patterns that have been identified for managing integrations?

- What middleware components are planned to be used within the solution?

Business intelligence strategy

Business intelligence strategy considers the design of the business intelligence features of the solution. This strategy includes traditional reporting and analytics. It includes the use of reporting and analytics features within the Dynamics 365 components and external components that will connect to Dynamics 365 data.

This topic focuses on answering questions such as:

- What are the processes within the solution that depend on reporting and analytics capabilities?

- What are the sources of data in the solution that will drive reporting and analytics?

- What are the capabilities and constraints of these data sources?

- What are the requirements for data movement across solution components to facilitate analytics and reporting?

- What solution components have been identified to support reporting and analytics requirements?

- What are the requirements to combine enterprise data from multiple systems/sources, and what does that strategy look like?

Security strategy

Security strategy considers the design of security within the Dynamics 365 components of the solution and the other Microsoft Azure and external solution components.

This topic focuses on answering questions such as:

- What is the overall authentication strategy for the solution? Does it comply with the constraints of the Dynamics 365 platform?

- What is the design of the tenant and directory structures within Azure?

- Do unusual authentication needs exist, and what are the design patterns that will be used to solve them?

- Do extraordinary encryption needs exist, and what are the design patterns that will be used to solve them?

- Are data privacy or residency requirements established, and what are the design patterns that will be used to solve them?

- Are extraordinary requirements established for row-level security, and what are the design patterns that will be used to solve them?

- Are requirements in place for security validation or other compliance requirements, and what are the plans to address them?

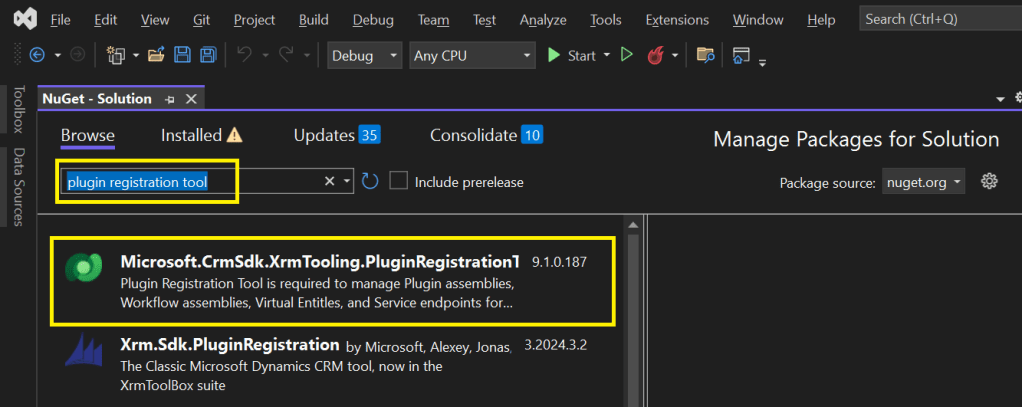

Application lifecycle management strategy

Application lifecycle management (ALM) strategy considers those aspects of the solution that are related to how the solution is developed and how it will be maintained given that the Dynamics 365 apps are managed through continuous update.

This topic focuses on answering questions such as:

- What is the preproduction environment strategy, and how does it support the implementation approach?

- Does the environment strategy support the requirements of continuous update?

- What plan for Azure DevOps will be used to support the implementation?

- Does the implementation team understand the continuous update approach that is followed by Dynamics 365 and any other cloud services in the solution?

- Does the planned ALM approach consider continuous update?

- Who is responsible for managing the continuous update process?

- Does the implementation team understand how continuous update will affect go-live events, and is a plan in place to optimize versions and updates to ensure supportability and stability during all phases?

- Does the ALM approach include the management of configurations and extensions?

Environment and capacity strategy

Environment and capacity strategy considers the deployment architecture: those aspects of the solution that are related to cloud infrastructure, environments, and the processes that are involved in operating the cloud solution.

This topic focuses on answering questions such as:

- Has a determination been made about the number of production environments that will be deployed, and what are the factors that went into that decision?

- What are the business continuance requirements for the solution, and do all solution components meet those requirements?

- What are the master data and transactional processing volume requirements?

- What locations will users access the solution from?

- What are the network structures that are in place to provide connectivity to the solution?

- Are requirements in place for mobile clients or the use of other specific client technologies?

- Are the licensing requirements for the instances and supporting interfaces understood?

A solution blueprint is essential for effective solution architecture, and the guiding questions above will help in this process.

Thank you for reading…

Hope this helps…

Cheers,

PMDY