Hi Folks,

Yesterday I was working with an Azure DevOps repository for my project. My local Develop branch was behind the Main branch by a few commits, and I wanted to pull the latest commits and bring the ones from Main into my Develop branch as well.

That is when I had to decide between the Git merge command and the Git rebase command. Here are my findings…

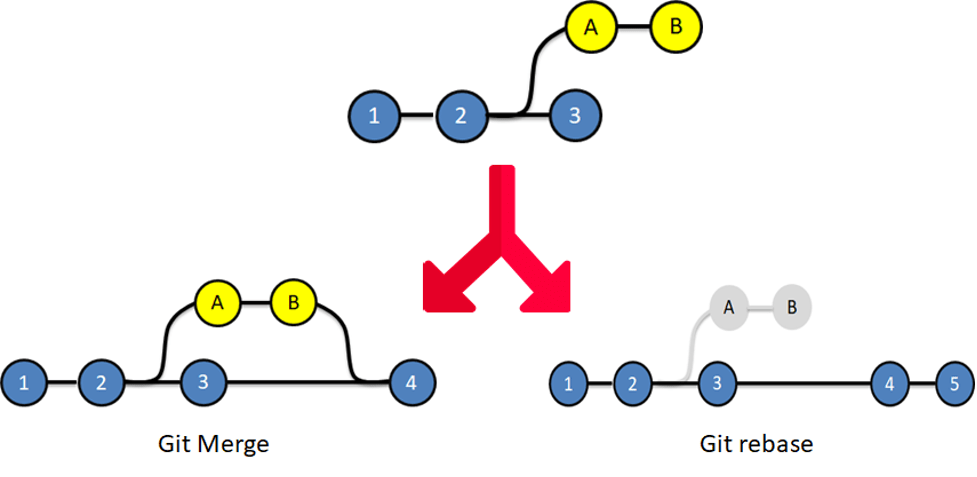

Looking into the details, the official Git manual states that rebase “reapplies commits on top of another base tip”, whereas merge “joins two or more development histories together”. In other words, the key difference between merge and rebase is that merge preserves history as it happened, while rebase rewrites it. The illustration should make this clearer, and the table lists the differences…

| Merge | Rebase |
| --- | --- |
| Git merge is a command that joins the histories of two branches. | Git rebase is a command that reapplies the commits of one branch on top of another. |
| The log shows the complete history, including the merge commits. | The log is linear, because the commits are replayed one after another. |
| One new merge commit is added to the target branch; the individual commits of the feature branch stay in the history (unless you squash). | Every commit is replayed, so the same number of commits is added on top of the target branch, each with a new hash. |
| Use merge when the target branch is a shared branch. | Use rebase when the target branch is a private branch. |
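To make the difference concrete, here is a toy model in JavaScript. It is only a sketch and not how Git stores anything: the `commit`, `merge`, `rebase` and `history` helpers and the commit messages are all made up for illustration. It mimics my situation, where Develop has two commits of its own and Main has moved on by one.

```javascript
// Toy commit graph: each commit knows its message and its parent commits.
// Illustration only; real Git objects and hashes work differently.
let nextId = 1;
function commit(message, parents = []) {
  return { id: nextId++, message, parents };
}

// Merge: add ONE new commit with two parents; existing commits are untouched.
function merge(ours, theirs) {
  return commit("Merge Main into Develop", [ours, theirs]);
}

// Rebase: replay each branch commit as a NEW commit on top of the new base.
function rebase(branchCommits, newBase) {
  return branchCommits.reduce((tip, c) => commit(c.message, [tip]), newBase);
}

// Every commit reachable from a tip, i.e. what the log would list.
function history(tip) {
  const seen = new Set();
  const stack = [tip];
  while (stack.length > 0) {
    const c = stack.pop();
    if (!seen.has(c)) {
      seen.add(c);
      stack.push(...c.parents);
    }
  }
  return [...seen];
}

// A history is linear when no commit has more than one parent.
const isLinear = (tip) => history(tip).every((c) => c.parents.length <= 1);

// Develop forked from "root" and has two commits; Main moved on by one.
const root = commit("root");
const m1 = commit("main work", [root]);
const d1 = commit("dev work 1", [root]);
const d2 = commit("dev work 2", [d1]);

const merged = merge(d2, m1);
const rebased = rebase([d1, d2], m1);

console.log(history(merged).length, isLinear(merged));   // 5 false
console.log(history(rebased).length, isLinear(rebased)); // 4 true
console.log(rebased === d2);                             // false
```

The merged tip keeps the original commits and adds one commit with two parents, so the log is not linear. The rebased tip is a fresh copy of the Develop commits sitting on top of Main, which is why the log is linear and why the original commits are no longer part of it.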

Both serve the same purpose and have their own uses, but at the end of the day the choice depends on your ALM strategy/methodology. I preferred merge over rebase because my branch is a shared branch, and for simplicity.

Hope this helps…

Cheers,

PMDY

Hi Folks,

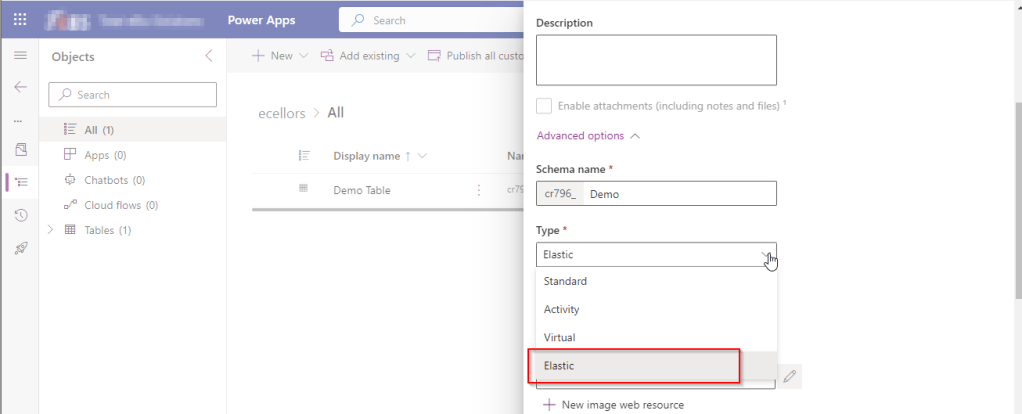

You may or may not have noticed it, but it's real: the existing Dynamics 365 CE table types now have a new companion called Elastic, which is yet to be announced.

Let's take a quick look at the table types that show up when you create a new table in Dataverse.

Everyone is aware of the Standard, Activity and Virtual types in model-driven apps. Elastic tables are a new table type in Dataverse, and they will probably be announced at the upcoming Microsoft Build 2023.

From my view, Elastic tables appear to be built on a concept similar to Elastic Query in Azure:

1. Elastic Query is usually meant for data archiving needs.

2. It lets you scale out queries to large data tiers and visualize the results in business intelligence (BI) reports.

3. It provides complete T-SQL querying capability on Azure SQL, and possibly on Dataverse.

Hopefully all the capabilities of Elastic Query in Azure SQL will be released in Dataverse as well.

References:

Data Types in Model Driven Apps

Cheers,

PMDY

Hi Folks,

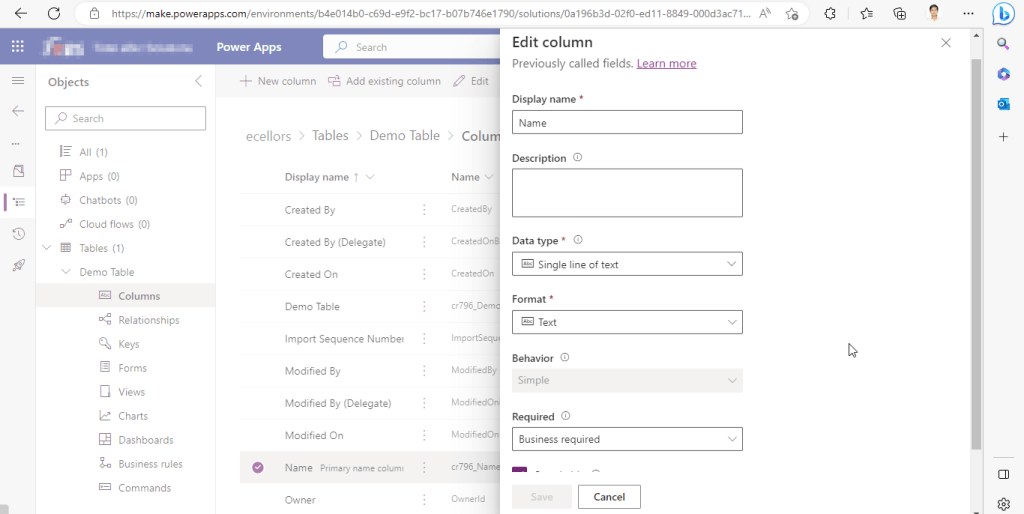

Did you know you can change the data type of a primary column between Single Line of Text and Autonumber, even after the table has been created with a defined primary name column? There is a catch…

So let’s see…

I first created a brand new table called Demo Table and kept the primary column as Single Line of Text. Earlier, once a table was created, you could not change the primary name column even if you wished to; the only way was to delete the table and re-create it with the correct type. But now you can change the type of the column, at least to a unique autonumber.

I want the primary name column to be unique, but when I look at the data captured in my table, I see many duplicates.

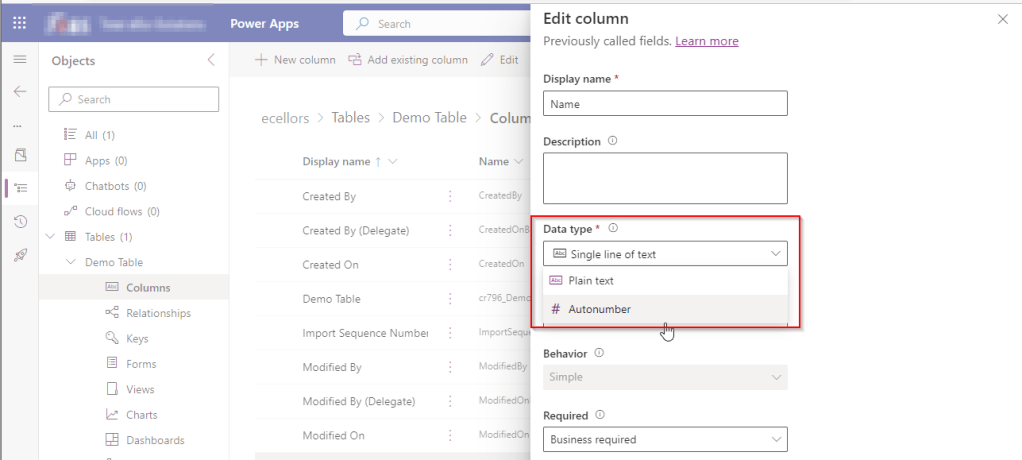

So let’s change the data type of the primary column to Autonumber.

The primary field looks as below initially…

Select the Data Type available…

Now select Autonumber from the drop-down…you can optionally specify a custom prefix for your autonumber…then click Save and publish the customizations.

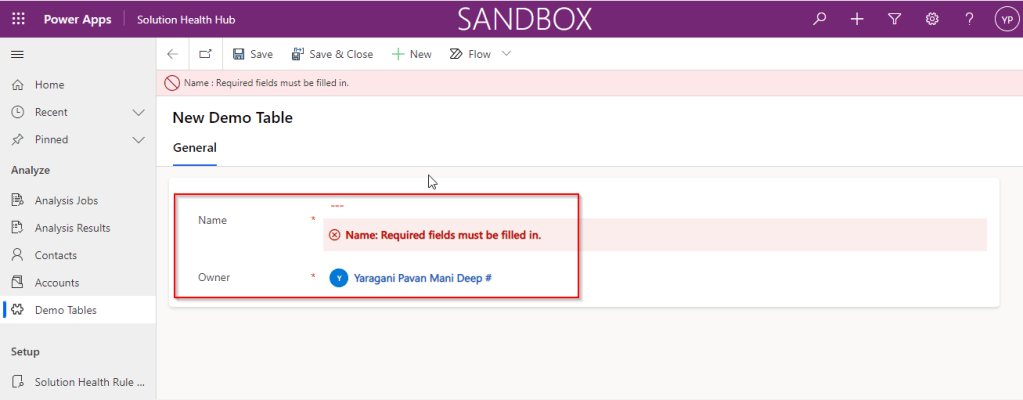

Now go back to your model driven app and then try creating a new record for the respective entity.

Since it is the primary column, it is mandatory by default…but wait…the Autonumber data type change is not reflected…and this stays the same even if you check and publish the solution multiple times. Nor can you enter the field value yourself, because you already chose Autonumber and the system should generate it.

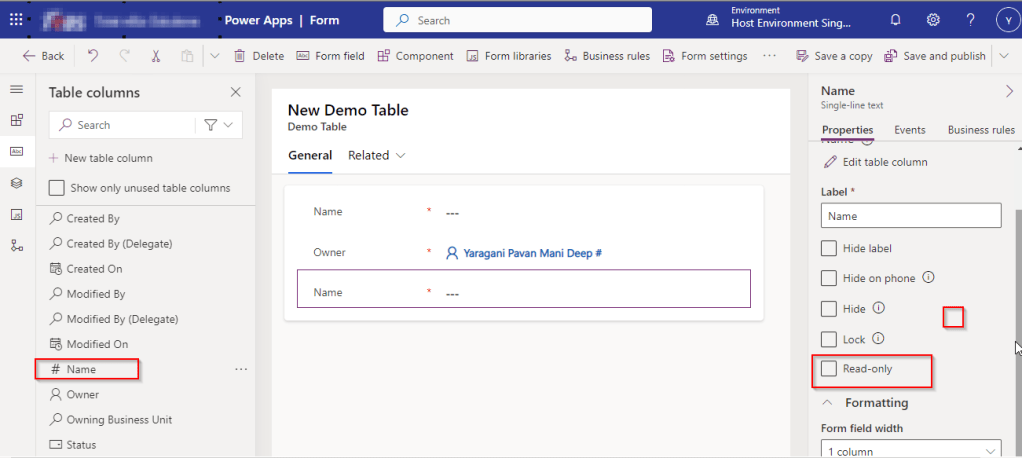

If you are scratching your head, this simple tip will help…

Just make the field read-only on the form where it is used, so you don’t need to enter a value for it…then publish the customizations.

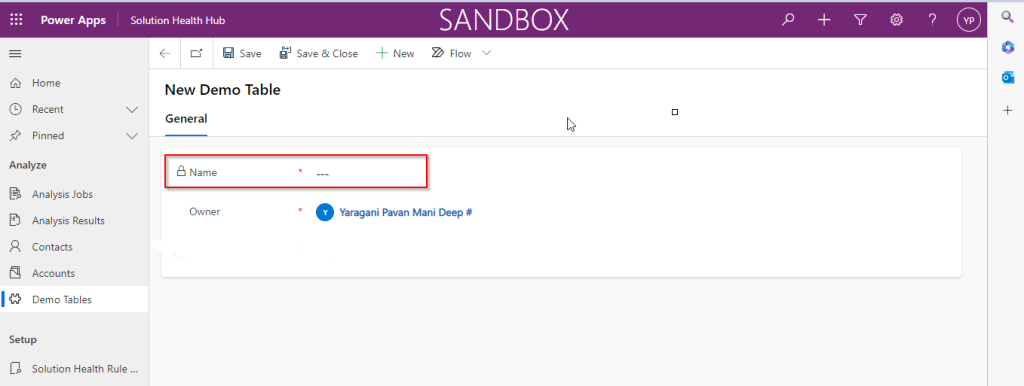

Once that is done, try to save the record…

There you go, you can see an Autonumber being populated in the primary field…

Cheers,

PMDY

Hi Folks,

As a Power Platform admin or consultant, do you often worry about your Power Platform request limits and the usage you have left? Do you receive warning messages from Microsoft that your database usage has been exceeded? Do you want to see the custom plugin errors encountered in your model-driven app on Dataverse, consolidate them, and forward them to your team without much effort? Then you are in the right place…

Just log in with your credentials to the admin page https://admin.powerplatform.microsoft.com/.

Expand Resources on the left…to find the Capacity menu

If you only want to know the data usage, you can go ahead and click Download as shown above to get it.

Want an in-depth analysis…then click Details as highlighted in the same snapshot above.

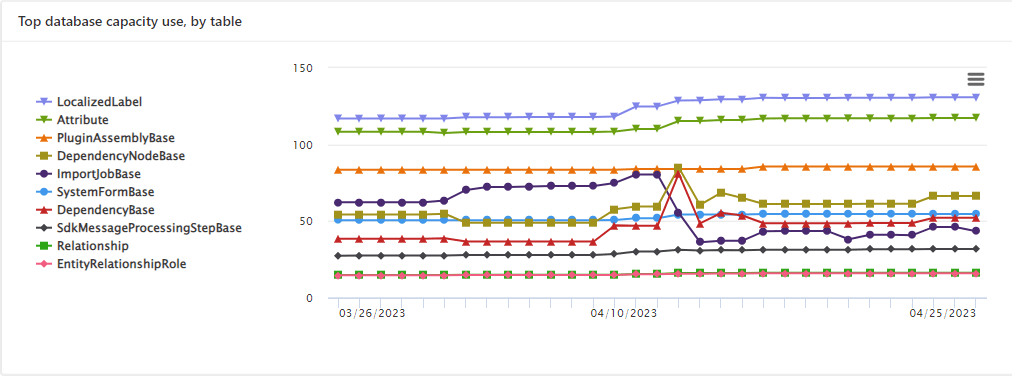

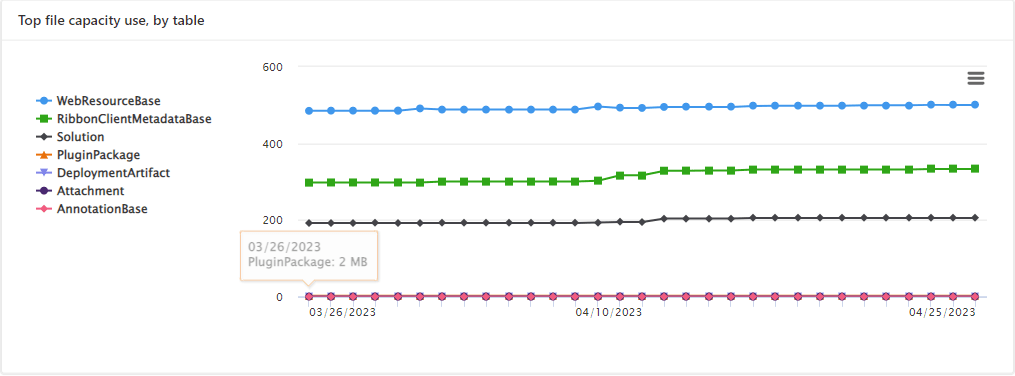

This page shows your database usage and file usage, with the respective breakdown by table, as below…

These are the reports I was able to extract from my trial environment; however, not all the reports are currently available in my region. This is expected, as the feature is still in preview and not recommended for production projects as of now. Definitely in the future…

Hope you found this post helpful…

Cheers,

PMDY

Hi Folks,

In this blog post, I will talk about implementing a custom page in your projects.

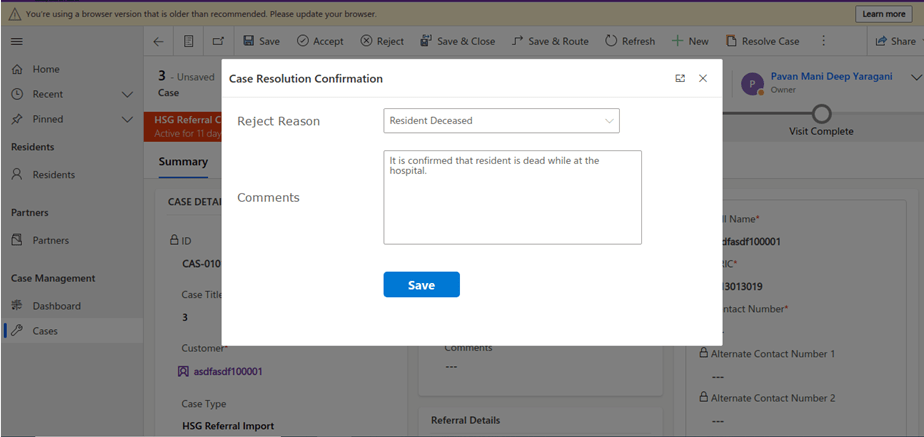

In our use case, the customer wants a button to reject cases; when Reject is clicked, a dialog box should capture the reject reason and comments and update them back on the record. For this we implemented a custom page and called it from a ribbon button. If you just want to show an alert, you can easily do that in JavaScript with the help of the OOB alert dialog…

Xrm.Navigation.openAlertDialog(alertStrings,alertOptions).then(closeCallback,errorCallback);
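For completeness, here is a minimal sketch of that call with the two arguments filled in. The function name `showRejectNotice` and the texts are made up for illustration; `xrm` is simply the global `Xrm` object, passed in as a parameter so the function is easy to try outside the app.

```javascript
// Shows a simple OOB alert dialog. `xrm` is the global Xrm object of a
// model-driven app; the function name and texts are hypothetical.
function showRejectNotice(xrm) {
  const alertStrings = {
    title: "Case rejected",
    text: "The case has been rejected.",
    confirmButtonLabel: "OK",
  };
  const alertOptions = { height: 120, width: 260 };

  return xrm.Navigation.openAlertDialog(alertStrings, alertOptions).then(
    () => console.log("Alert closed"),      // closeCallback
    (error) => console.log(error.message)   // errorCallback
  );
}
```

Inside a form script you would call it as `showRejectNotice(Xrm)`.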

But if the user needs to update record details, such as an option set field, directly from the pop-up, you should consider a custom page as we did.

All we used is JavaScript, Ribbon Workbench and a custom page…The first step is to design the custom page in https://make.powerapps.com/

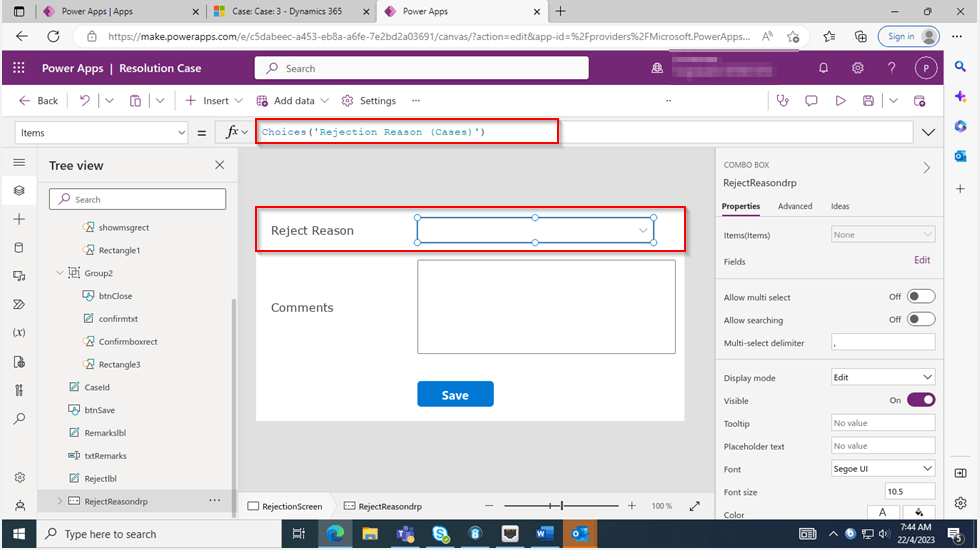

The optionset for Reject Reason is bound to the Reject Reason combo box using the below property.

On the App start, we will set the parameter with what we have supplied from the ribbon on-click function.

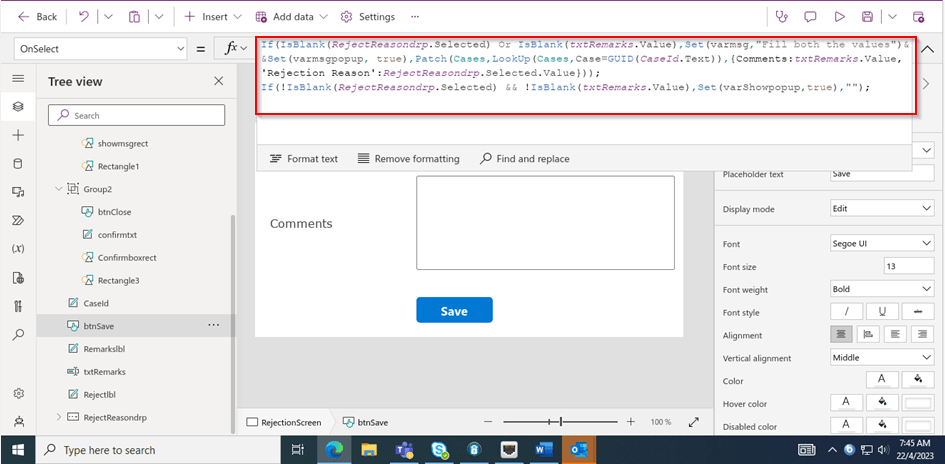

On the OnSelect property of the Save button, we can use the below function

Function:

Here’s the js code for the button OnClick Event…
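A minimal sketch of such a handler, under a few assumptions: the custom page's logical name `new_rejectcase_c1a2b` is made up (use the name of your own page), the function names are hypothetical, and the ribbon command passes the PrimaryControl CRM parameter as the first argument. It opens the page as a centered dialog with `Xrm.Navigation.navigateTo` and refreshes the form once the dialog is closed.

```javascript
// Hypothetical logical name of the custom page; replace it with your own.
const REJECT_PAGE_NAME = "new_rejectcase_c1a2b";

// Builds the pageInput for a custom page opened in the context of a record.
function buildRejectPageInput(entityName, recordId) {
  return {
    pageType: "custom",
    name: REJECT_PAGE_NAME,
    entityName: entityName,
    recordId: recordId.replace(/[{}]/g, ""), // getId() returns the GUID in braces
  };
}

// Ribbon command handler. `primaryControl` is the form context passed by the
// command; `xrm` defaults to the global Xrm object inside the app.
function onRejectClick(primaryControl, xrm = Xrm) {
  const pageInput = buildRejectPageInput(
    primaryControl.data.entity.getEntityName(),
    primaryControl.data.entity.getId()
  );
  const navigationOptions = {
    target: 2,   // open as a dialog
    position: 1, // centered
    width: { value: 450, unit: "px" },
    height: { value: 400, unit: "px" },
    title: "Reject case",
  };

  return xrm.Navigation.navigateTo(pageInput, navigationOptions).then(
    () => primaryControl.data.refresh(false), // show the updated values
    (error) => console.log(error.message)
  );
}
```

The record id and table name passed here are what the custom page can pick up on start to know which case to update.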

Here is the Ribbon Workbench customization that was added…

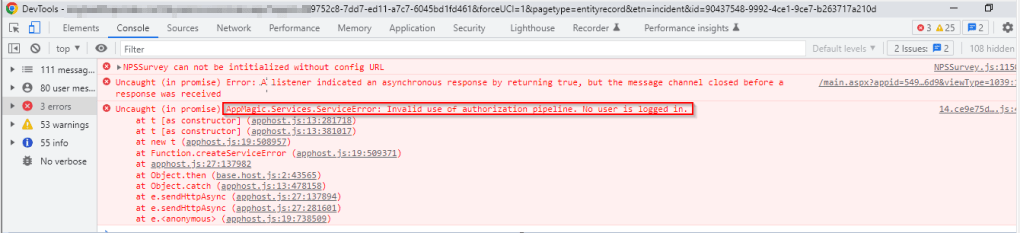

Finally, publish the customizations and add the custom page to the model-driven app…don’t forget this step, as it is mandatory for getting authorization to your page; otherwise you will see the error below in the developer tools of your browser…and no custom page opens up…

That’s it…when Reject is clicked, you should see a page as below…

Upon entering the details as above, you will be shown a confirmation screen as below..

Once you click on Close, the selected details will be updated back in the record.

Hope this helps someone implementing custom page for a similar requirement….

Cheers,

PMDY

Hi Folks,

This blog is just to let you know why you should stop implementing OData calls using the V2.0 endpoint. I am pretty sure almost every Dynamics CE project out there has used these OData calls in its implementations for quite some time. While some newer implementations have replaced the logic with the Web API, some people still use OData V2.0 calls to build their functionality in JavaScript.

Microsoft had actually planned to remove this endpoint on April 30, 2023. But they deferred the removal because many projects aren’t yet prepared for it, which gives customers time to prepare for the transition to the Web API endpoint.

Identify whether you are still using the OData V2.0 endpoint. The Organization Data Service is an OData V2.0 endpoint that was introduced with Dynamics CRM 2011; it was deprecated way back with Dynamics 365 CE version 8.0.

So how do you identify where you are using OData endpoints in your code? You shouldn’t expect that existing code will work with only minor changes, or that this work can be taken up at a later stage. This is a high-priority warning from Microsoft about the removal, so I urge all of you to prepare for it soon so that you aren’t surprised.
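To give a feel for the change, here is a rough sketch of a Web API version of a simple read. The function name `getTopAccountNames` and the query are made up for illustration; the legacy request in the comment is the kind of URL you would be replacing. Note that the old endpoint uses schema names (`AccountSet`, `Name`), while the Web API uses logical names (`account`, `name`), so it is more than a find-and-replace.

```javascript
// Legacy style, to be replaced:
//   GET [org]/XRMServices/2011/OrganizationData.svc/AccountSet?$select=Name&$top=3

// Web API style, using the client API available in model-driven apps.
// `xrm` defaults to the global Xrm object; the function name is hypothetical.
function getTopAccountNames(xrm = Xrm) {
  return xrm.WebApi
    .retrieveMultipleRecords("account", "?$select=name&$top=3")
    .then((result) => result.entities.map((account) => account.name));
}
```

Inside a form script, `getTopAccountNames().then(console.log)` would log the account names that come back.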

So where to change…..?

Below are the places where you should change your implementation to align with Microsoft…

Any JavaScript that references /XRMServices/2011/OrganizationData.svc (also seen in lower case as /xrmservices/2011/organizationdata.svc). You can identify such code with the help of the checker service rule web-avoid-crm2011-service-odata. Typically this is code that makes OData calls to perform CRUD operations on the current table or a related table.

Note:

This announcement does not involve the deprecated Organization Service SOAP endpoint, meaning the use of the Organization service in plugins. At this time, no date has been announced for the removal of that endpoint. At the time of writing this blog post, Microsoft had not announced whether this removal applies only to Online or also to on-premises versions.
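If you want a quick first pass over your own web resources before running the checker, a small script along these lines can help. It is only a sketch: `findLegacyODataCalls` is a made-up helper that scans a script's text for the endpoint path, and it is no substitute for the checker rule.

```javascript
// Matches the OData v2.0 endpoint path in any letter case.
// It deliberately does not match the SOAP endpoint (Organization.svc).
const LEGACY_ENDPOINT = /\/xrmservices\/2011\/organizationdata\.svc/i;

// Returns the line number and text of every line that references the endpoint.
function findLegacyODataCalls(source) {
  return source
    .split(/\r?\n/)
    .map((text, index) => ({ line: index + 1, text: text.trim() }))
    .filter((entry) => LEGACY_ENDPOINT.test(entry.text));
}

// Example: a made-up web resource with one legacy call.
const sample = [
  "var base = Xrm.Page.context.getClientUrl();",
  "var url = base + '/XRMServices/2011/OrganizationData.svc/AccountSet';",
  "var soap = base + '/XRMServices/2011/Organization.svc';",
].join("\n");

console.log(findLegacyODataCalls(sample).map((hit) => hit.line)); // [ 2 ]
```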

References:

How to use Application Insights to identify usage of the OrganizationData.svc endpoint?

OData v2.0 Service removal date announcement

The Clock is Ticking on Your Endpoint

Do not use the OData v2.0 endpoint

Hope this saves time and effort implementing your Dynamics CE Solutions…

Cheers,

PMDY

Hi Folks,

This blog post isn’t about any use case; rather, it highlights the importance and benefits of designing your data model for your reporting requirements. Every Power BI developer should consider this in the first place.

When designing Power BI reports or dashboards, data modelling is the first step and plays a very key role. Coming to schemas, there are two: star schema and snowflake schema. This blog post mainly talks about star schema for your Power BI report/dashboard design.

With star schema, Power BI data models are optimized for performance and usability. While every consultant tries to create stunning visuals, they also need to focus on their data model before spending time on report design.

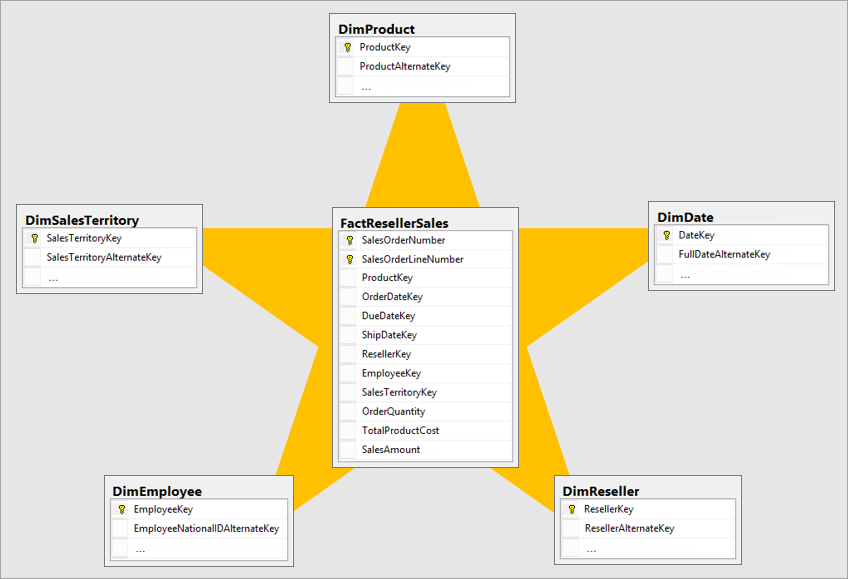

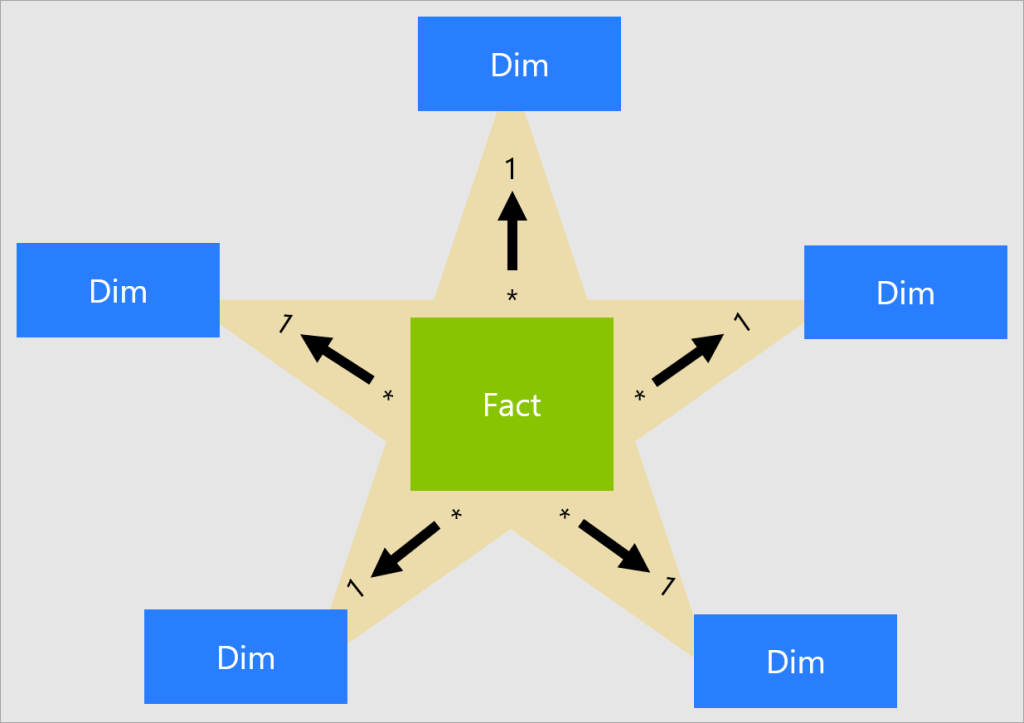

Star schema revolves around two types of tables: fact tables and dimension tables (which describe the business entities).

The fact table is the central table in a star schema. Dimension tables are the tables connected to the fact table through one-to-many relationships. Generally, dimension tables contain a relatively small number of rows. Fact tables, on the other hand, can contain a very large number of rows and continue to grow over time. So now let’s see what a star schema looks like, taken from the Adventure Works sample.

The main point to note here is normalization and denormalization, two concepts that help explain how a star schema can increase the performance of your dataset.

A star schema uses denormalized dimension tables, whereas a snowflake schema normalizes its dimensions into further related tables. The design fits well with star schema principles:

You can visualize the relationship as per the below diagram…

I will also briefly cover the topics below, which are not widely known yet are must-knows for designing an efficient Power BI dataset.

Last but not least, designing your data model as a star schema is a best practice suggested by Microsoft.

Look at the references if you want a video that can be a great help in understanding star schema, especially for beginners…
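To make the fact/dimension split concrete outside Power BI, here is a tiny sketch in JavaScript. The table names, columns and numbers are made up (loosely in the spirit of a sales sample), and `salesByCategory` is a hypothetical helper: the dimension table carries the descriptive attributes you group by, and the fact table carries the keys and the numbers you summarize.

```javascript
// Dimension table: few rows, descriptive attributes kept together (denormalized).
const dimProduct = [
  { productKey: 1, name: "Road Bike", category: "Bikes" },
  { productKey: 2, name: "Helmet", category: "Accessories" },
];

// Fact table: many rows, each holding keys to dimensions plus numeric measures.
const factSales = [
  { productKey: 1, quantity: 2, amount: 3000 },
  { productKey: 2, quantity: 5, amount: 250 },
  { productKey: 1, quantity: 1, amount: 1500 },
];

// Summarize the fact table, grouped by an attribute of the dimension table.
function salesByCategory(facts, products) {
  const categoryOf = new Map(products.map((p) => [p.productKey, p.category]));
  const totals = {};
  for (const row of facts) {
    const category = categoryOf.get(row.productKey);
    totals[category] = (totals[category] || 0) + row.amount;
  }
  return totals;
}

console.log(salesByCategory(factSales, dimProduct));
// { Bikes: 4500, Accessories: 250 }
```

Each product appears once in the dimension table, so the relationship from dimension to fact is one-to-many, and the category sits directly on the product row instead of in a separate category table as it would in a snowflake design.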

References:

Star Schema Data Model in Power BI

Hope this helps…

Cheers,

PMDY

Reblogged on ecellorscrm.com…

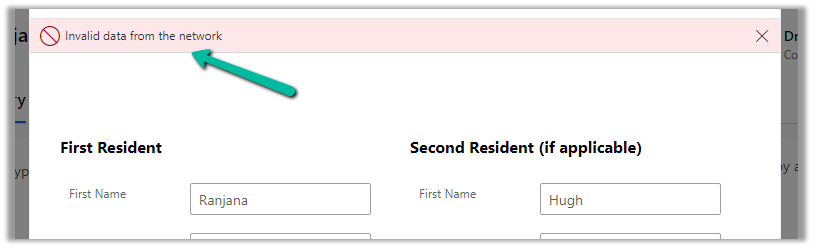

Recently in one of our custom pages, we were getting the below error

“Invalid data from the network”

We had the setting “Formula-level error management” turned on; switching it off hid this error on the page.

To fix it, we tried removing the fields one by one to find the cause. Eventually, we saw that the error went away if we removed one of the option set fields from the gallery or commented out its formula. Later, we removed the field and added it back to the gallery, and the error was fixed for us.

The page loading properly without the error –

Hope it helps..