Hello Folks,

I believe every Power Platform professional working with Dataverse has, at one time or another, worked with calculated fields. They have offered an easy way to perform calculations on the supported data types since they were introduced with CRM 2015 Update 1.

Here is a very simple calculation to get your Fx data type up and running in a few seconds. Follow along.

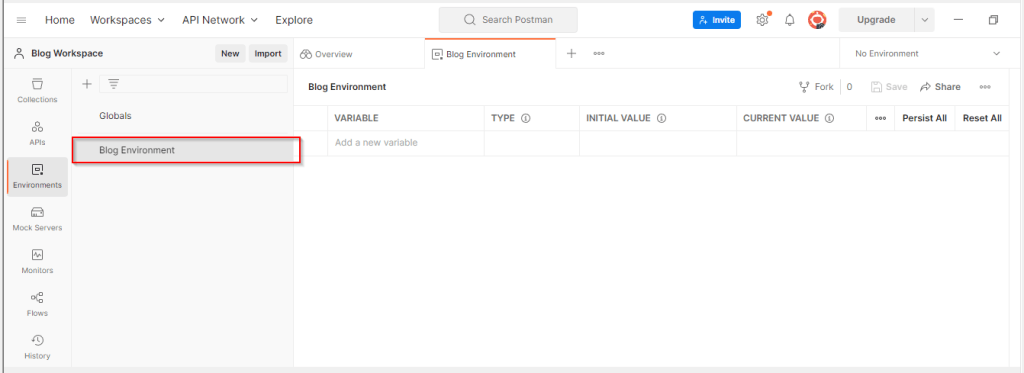

Navigate to https://make.powerapps.com/

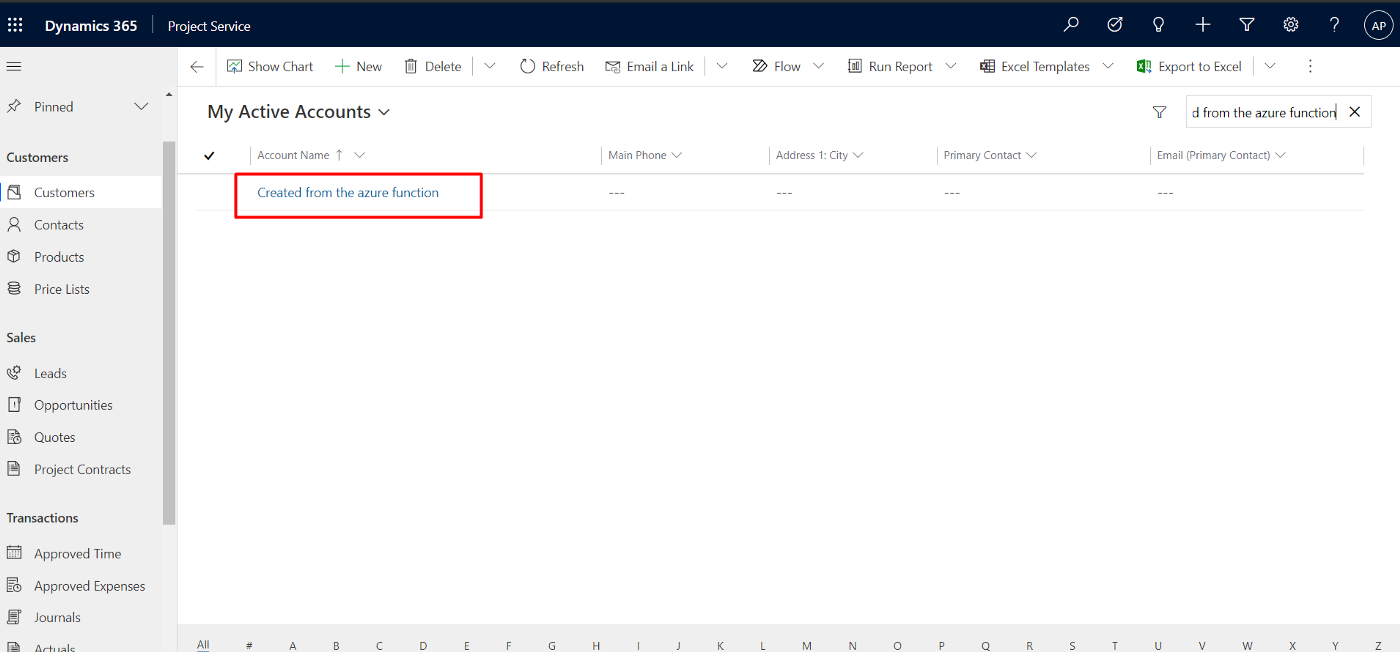

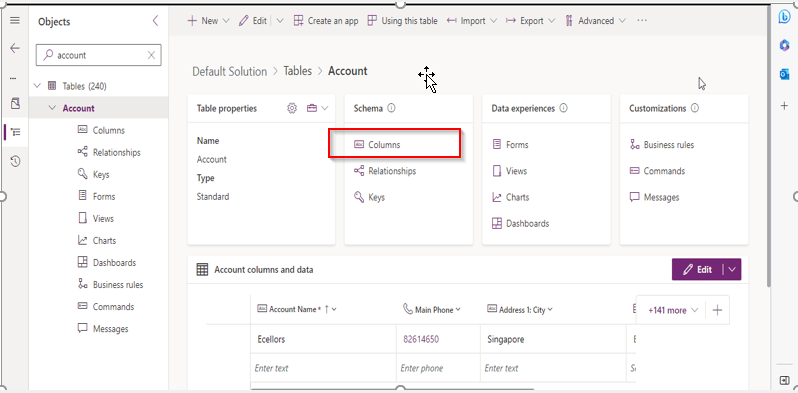

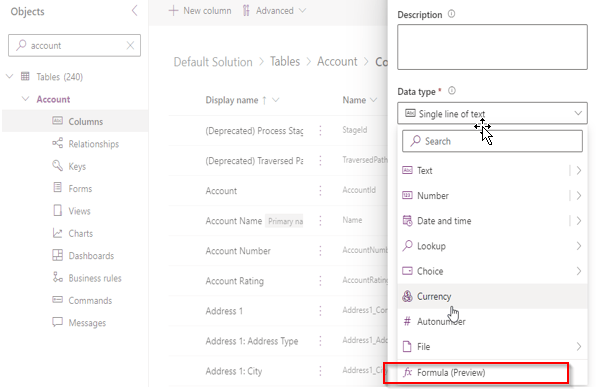

Open your solution and navigate to the columns of any table. For simplicity, I am using the Accounts table as the example.

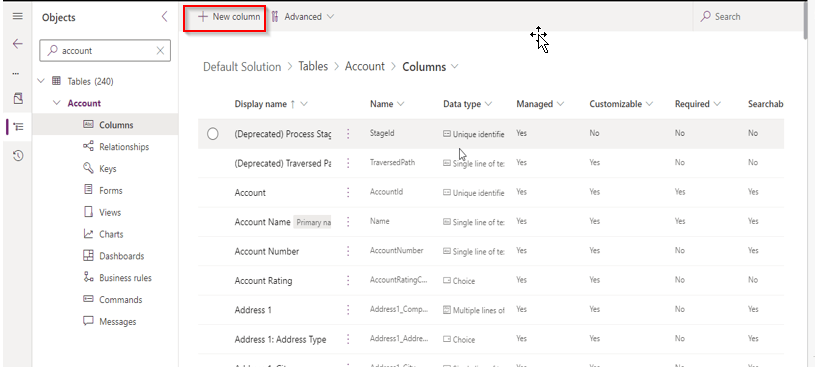

Now create a new column as shown below.

These are the key values for the field. Note that the data type Fx is selected.

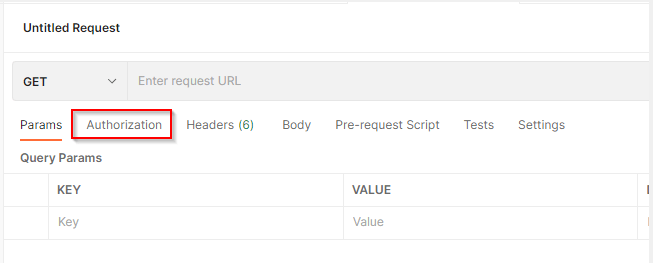

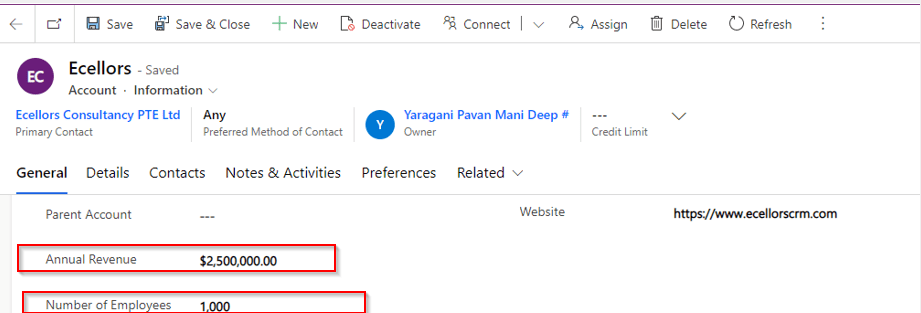

I already have the two fields shown below on the form, which I will use to calculate the annual revenue per employee from the company's Annual Revenue.

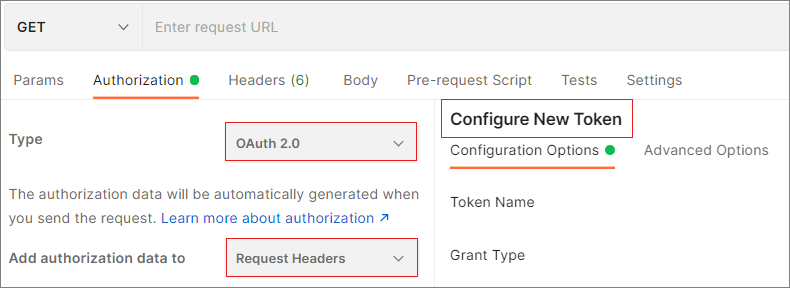

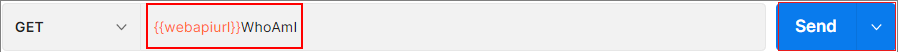

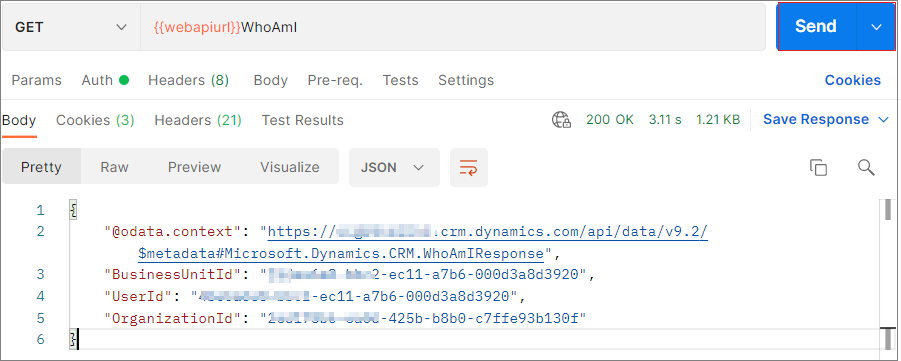

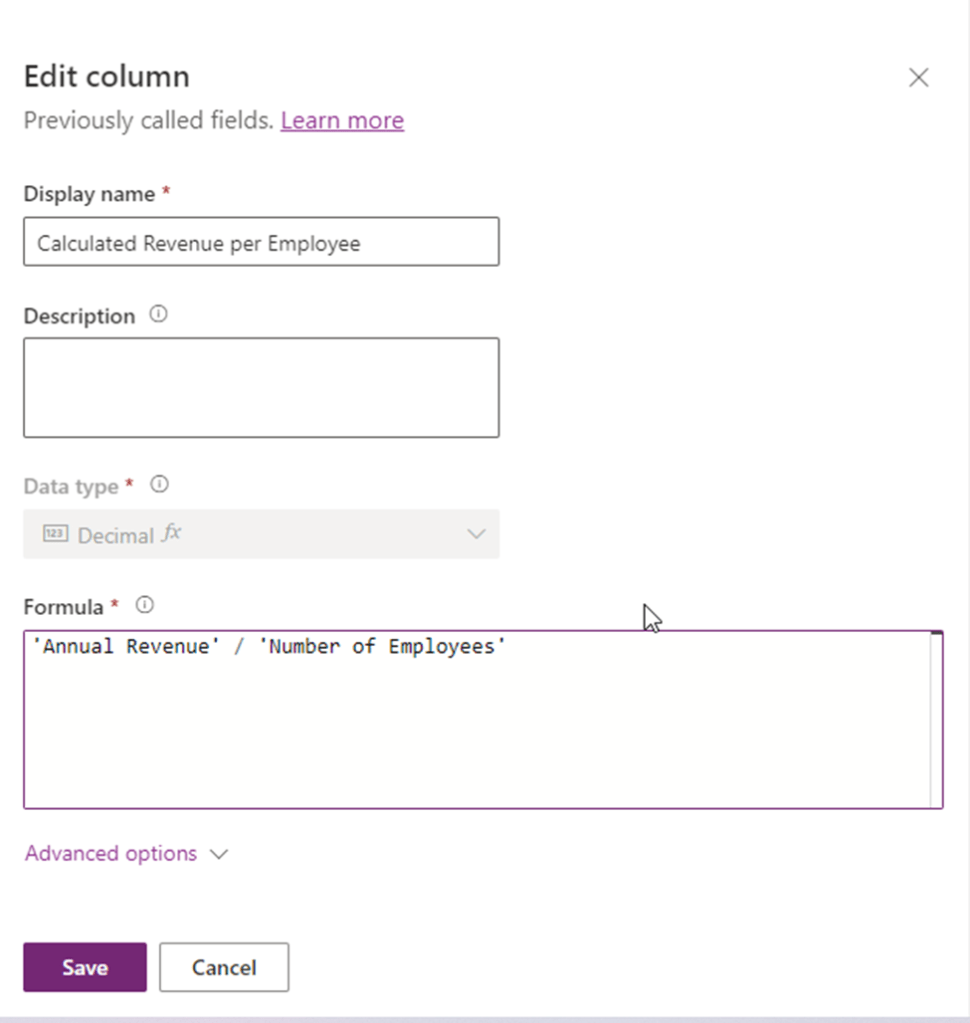

So now let's write a simple Power Fx formula to calculate the Annual Revenue per Employee. The expression is as follows.

Annual Revenue is a currency field and the Number of Employees field is a single line of text. As soon as you save, the system automatically identifies the data type as Decimal Number, as shown above. Click Save and publish the form.
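The formula itself is only visible in the screenshots, so here is a minimal sketch of what such an expression might look like. The column display names are assumptions based on the description above, and `Value()` is included only because Number of Employees is described as a text column; drop it if your column is already numeric.

```powerfx
// Sketch only: 'Annual Revenue' and 'Number of Employees' are assumed display names.
// Value() converts the text column to a number before dividing.
'Annual Revenue' / Value('Number of Employees')
```

For example, an Annual Revenue of 120,000 with 40 employees would give 3,000 per employee.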

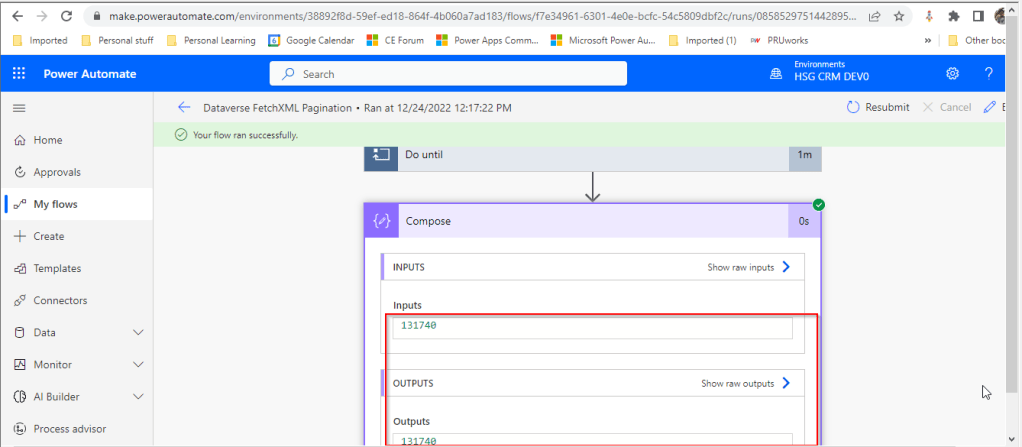

Let's see it in action on the form. As soon as you enter values for Annual Revenue and Number of Employees and save, the Calculated Revenue for the Employee field is populated by the Power Fx expression.

Hope this will be useful in your future implementations.

Points to keep in view:

- Formula columns are in preview at the time of writing this blog post.

- Currently, formula columns can't be used in roll-up fields or with plugins.

- You can use the following operators in a formula column: +, -, *, /, %, ^, in, exactin, &

- The Microsoft documentation says that the Currency data type isn't currently supported, but it actually works.

- The Text and Value functions only work with whole numbers, where no decimal separator is involved.
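To make the operator list a little more concrete, here are a few hedged one-line sketches. Each line would be the formula of a separate column, and 'Account Name' is an assumed display name used only for illustration.

```powerfx
// Text result: & concatenates text values.
'Account Name' & " (customer)"

// Yes/no result: in is a case-insensitive substring test.
"ltd" in 'Account Name'

// Yes/no result: exactin is the case-sensitive version of in.
"Ltd" exactin 'Account Name'
```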

Ref: Formula Column

Cheers,

PMDY

![Power Fx Formula Data type – your new companion in addition to Calculated fields in Dataverse [Insight]](https://ecellorscrm.com/wp-content/uploads/2023/03/power-fx-template.png?w=1200)