Hi Folks,

This is a blog post series on Canvas Apps that takes you from zero to hero in Canvas Power Apps. The series covers the following topics:

- Introduction to Canvas Apps – What they are, why they matter, and real-world use cases.

- Setting Up Your First Canvas App – Step-by-step guide for beginners.

- Understanding Screens and Navigation – How to structure an app with multiple screens.

- Working with Data Sources – Connecting to SharePoint, Dataverse, Excel, and other sources.

- Forms and Galleries – Displaying and capturing data effectively.

- Mastering Power Fx – Key formulas and best practices.

- User Experience and UI Design – Creating a responsive and user-friendly interface.

- Using Components for Reusability – Making apps scalable and maintainable.

- Working with Media and Attachments – Adding images, videos, and file uploads.

- Performance Optimization Tips – Best practices to make apps faster.

- Offline Capabilities in Canvas Apps – How to work with apps when offline.

- Integrating Power Automate with Canvas Apps – Automating processes.

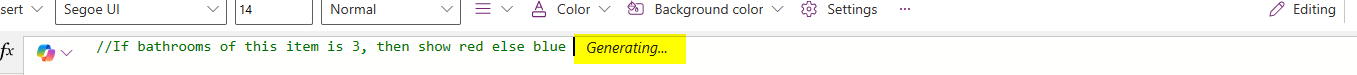

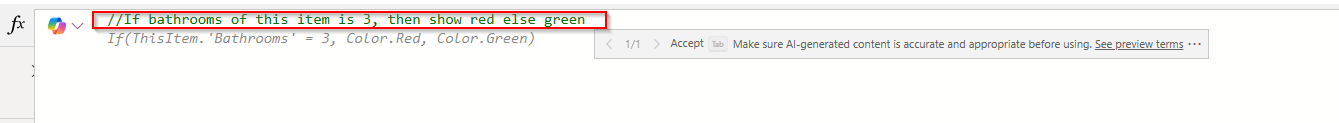

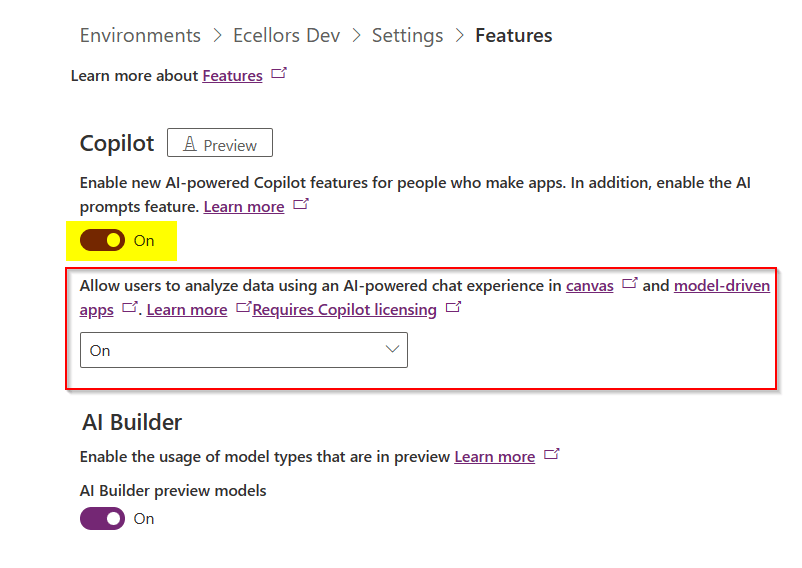

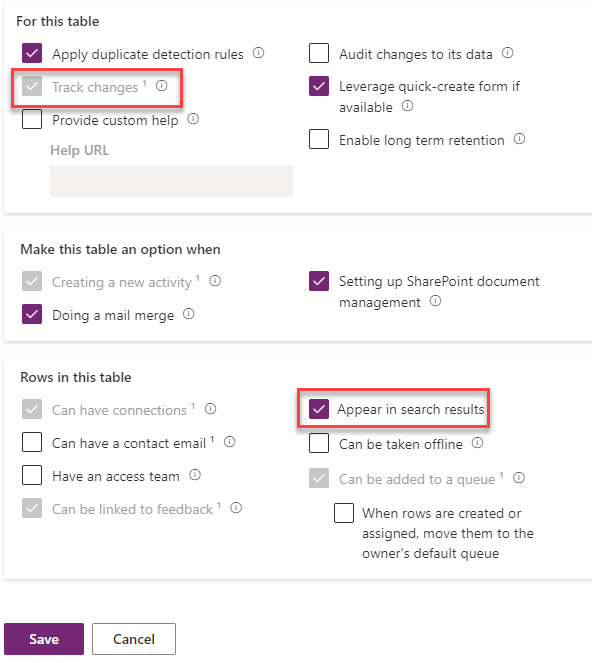

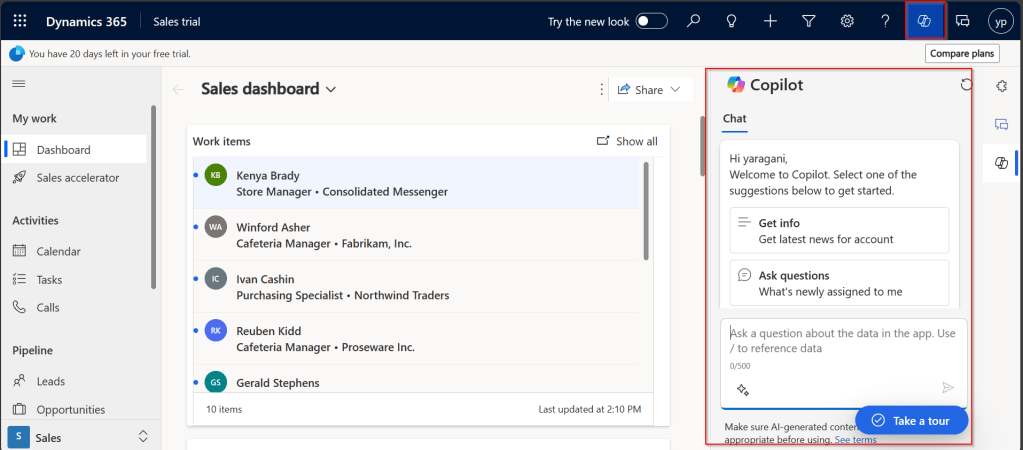

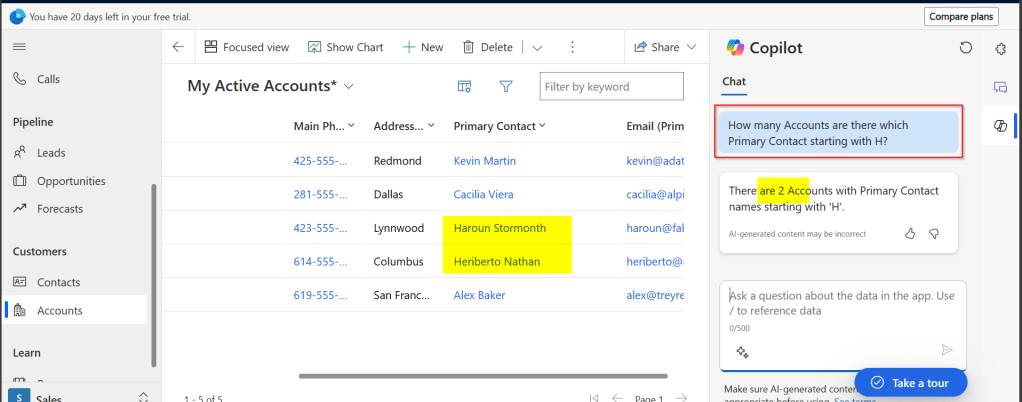

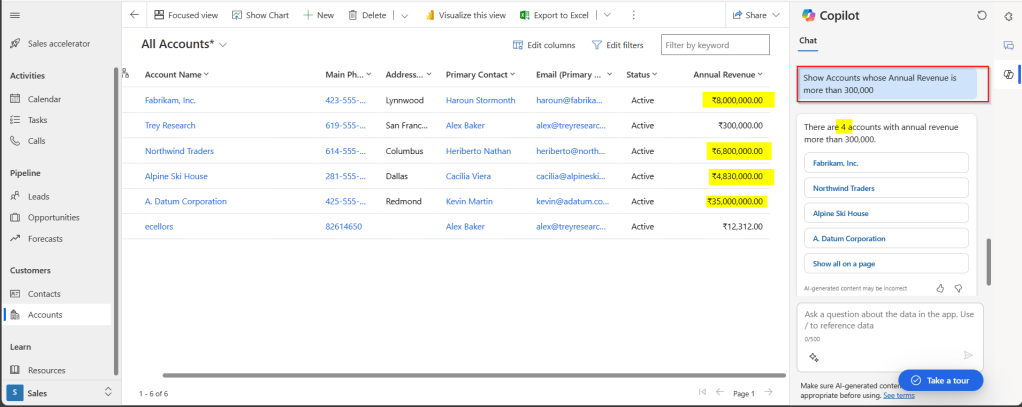

- AI and Copilot Features in Canvas Apps – Adding intelligence to apps.

- Advanced Security and Role-Based Access – Controlling user access and permissions.

- Publishing and Managing Your Canvas Apps – Deployment, versioning, and governance.

Let's start with a simple introduction in this post.

What Are Canvas Apps?

Canvas Apps are a powerful low-code development tool within Microsoft Power Platform that allows users to build custom business applications with a drag-and-drop interface. Unlike model-driven apps, which rely on structured data models, Canvas Apps provide full control over the user interface, enabling developers and business users to design highly customized applications tailored to specific business needs.

Canvas Apps can be used to create simple applications for internal business processes or sophisticated applications with multiple screens, data interactions, and integrations with other Microsoft and third-party services. Users can design these apps using Power Apps Studio, a web-based development environment that provides a range of components, such as buttons, galleries, forms, and media controls, to create intuitive and responsive applications.

Why Are Canvas Apps Important?

Canvas Apps bring significant value to businesses and developers by providing:

- Low-Code Development – Build applications with minimal coding, making app development accessible to both developers and non-developers. Power Fx, a formula-based language, enables business logic implementation with ease.

- Customization & Flexibility – Unlike model-driven apps that follow a predefined data structure, Canvas Apps allow users to freely design screens, layouts, and controls, ensuring the app meets unique business requirements.

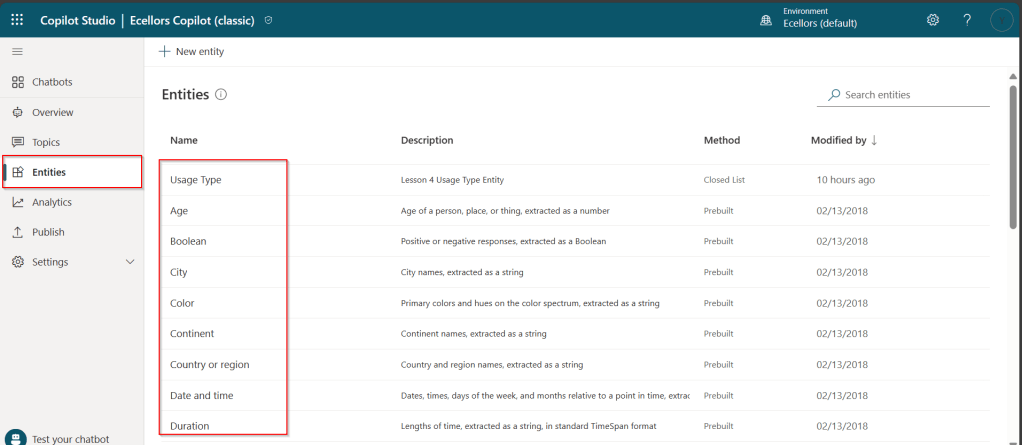

- Seamless Data Integration – Connect to 800+ data sources, including SharePoint, Dataverse, Excel, SQL Server, and third-party APIs, for direct access to enterprise data.

- Cross-Platform Compatibility – Run apps on web browsers, mobile devices (iOS & Android), and embedded within Microsoft Teams, SharePoint, and Dynamics 365.

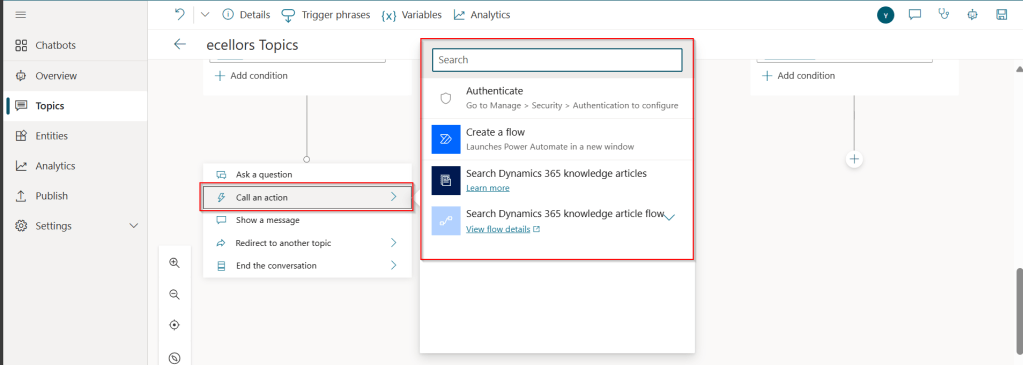

- Integration with Power Platform – Enhance apps with Power Automate for automation workflows, Power BI for data visualization, and AI Builder for AI-driven insights and intelligent automation.

- Rapid Prototyping & Deployment – With the drag-and-drop interface and prebuilt templates, businesses can quickly prototype and deploy applications without long development cycles.

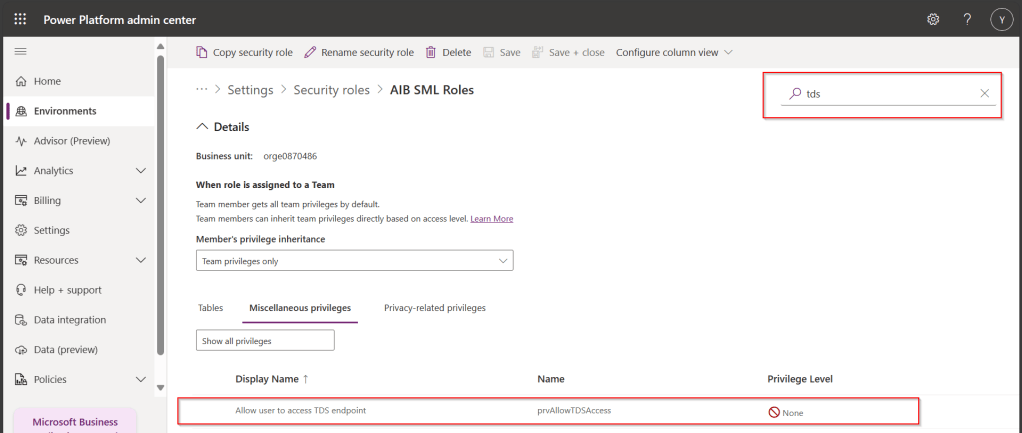

- Security & Compliance – Canvas Apps inherit Microsoft’s security infrastructure, supporting role-based access control (RBAC) and compliance with enterprise security standards.
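To give a feel for the formula-based style of Power Fx mentioned above, here is a minimal sketch of three property formulas. Each formula would be set on a different control property, much like a cell formula in Excel. The control names (`txtQuantity`, `txtTitle`) are hypothetical and only serve as an illustration.

```powerfx
// Text property of a label: greets the signed-in user
"Welcome, " & User().FullName

// Visible property of a warning label: shown only while the
// hypothetical text input txtQuantity holds a number above 100
Value(txtQuantity.Text) > 100

// DisplayMode property of a submit button: disabled until the
// hypothetical text input txtTitle has a value
If(IsBlank(txtTitle.Text), DisplayMode.Disabled, DisplayMode.Edit)
```

These formulas recalculate automatically whenever the values they depend on change, so no extra event-handling code is needed.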

Real-World Use Cases

Canvas Apps can be leveraged across industries to improve efficiency and streamline operations. Some common real-world use cases include:

- Expense Management App – Employees can submit expenses with receipts, managers can approve them, and finance teams can generate reports.

- Inventory Management System – Track stock levels, reorder inventory, and generate reports in real time.

- Incident Reporting App – Employees can report workplace incidents with photos, location, and real-time status updates.

- Customer Feedback App – Collect customer feedback through mobile-friendly forms and analyze responses with Power BI.

- Field Service Management – Field workers can access work orders, update job statuses, and capture customer signatures through mobile devices.

- HR Onboarding App – Manage the onboarding process for new employees with guided forms, policy documents, and task checklists.

Getting Started with Canvas Apps

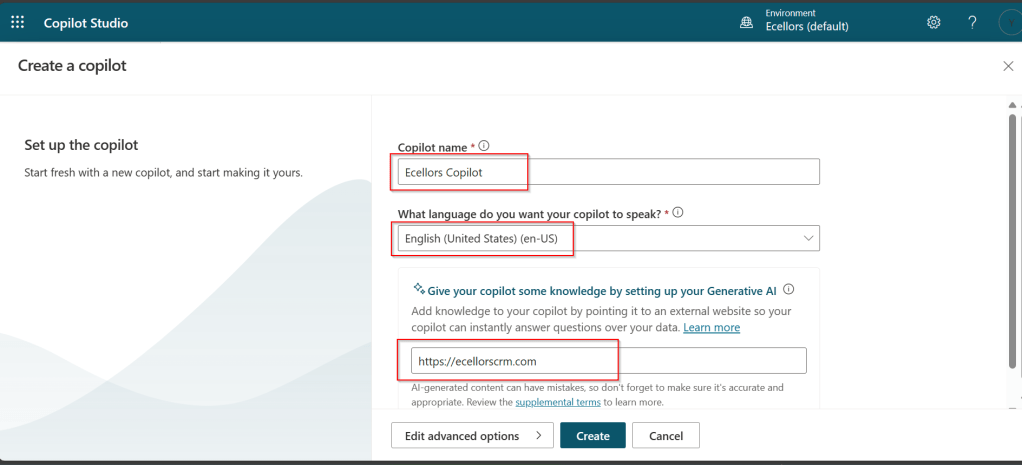

To start building a Canvas App, follow these steps:

- Sign in to Power Apps (https://make.powerapps.com)

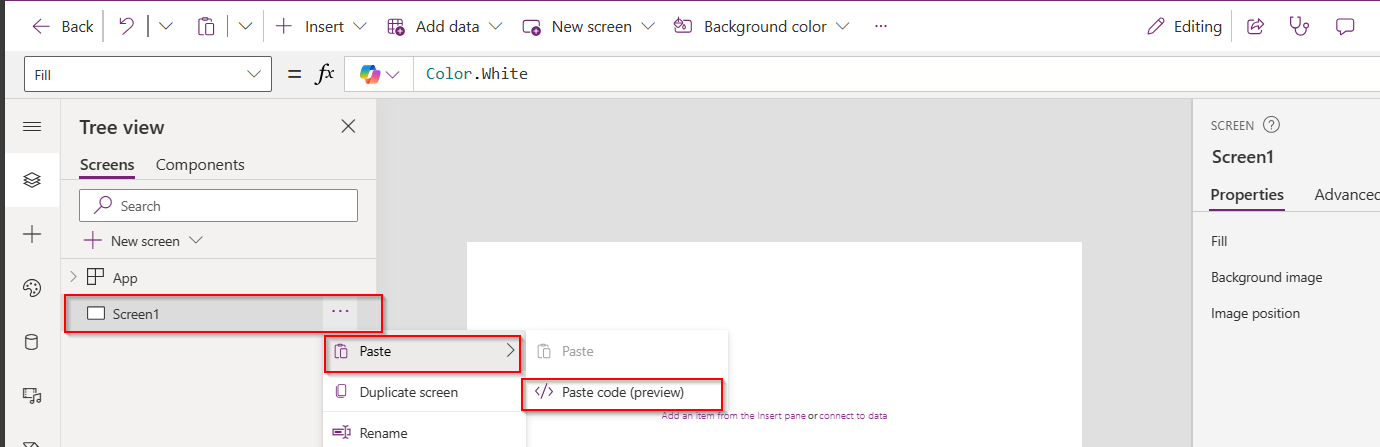

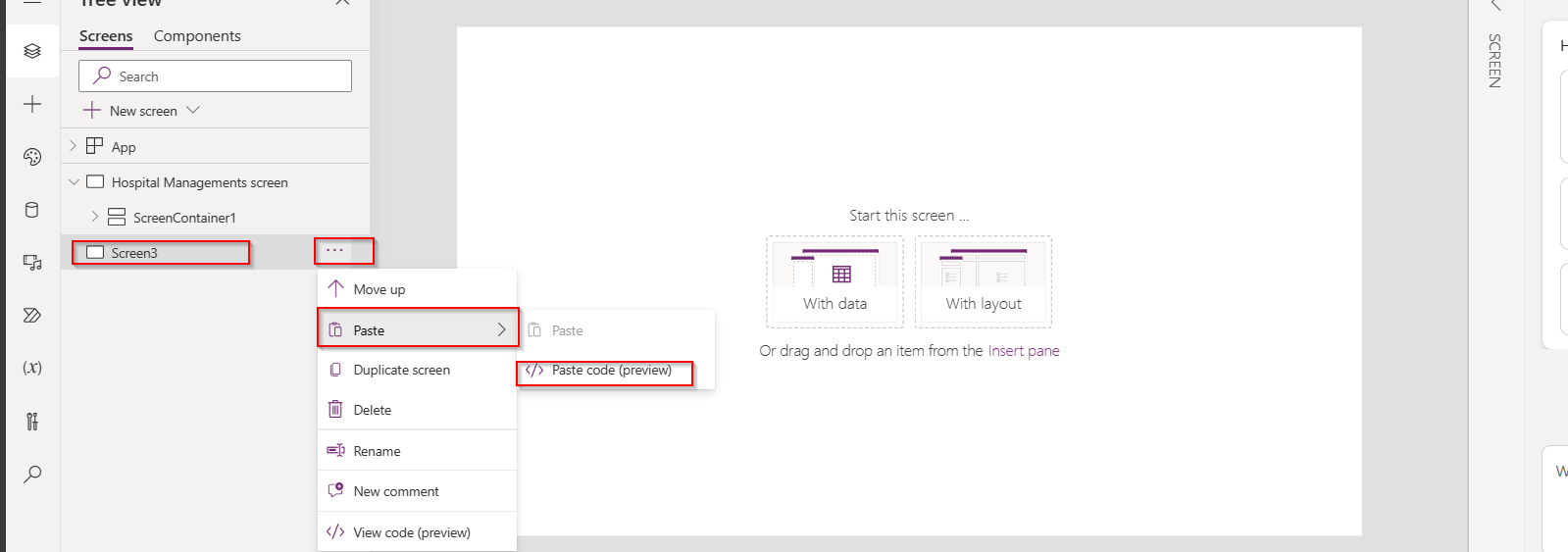

- Click on ‘Create’ and select ‘Canvas App from Blank’

- Choose a layout (Tablet or Phone) based on your app’s intended use

- Design your app using Power Apps Studio:

  - Add Screens: Home screen, forms, galleries, etc.

  - Insert Controls: Buttons, text inputs, dropdowns, and images

  - Connect Data Sources: Link to Dataverse, SharePoint, SQL, etc.

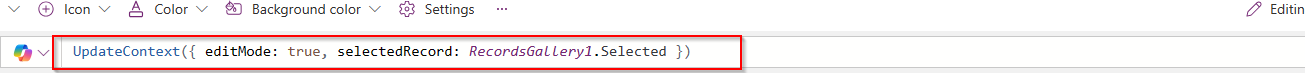

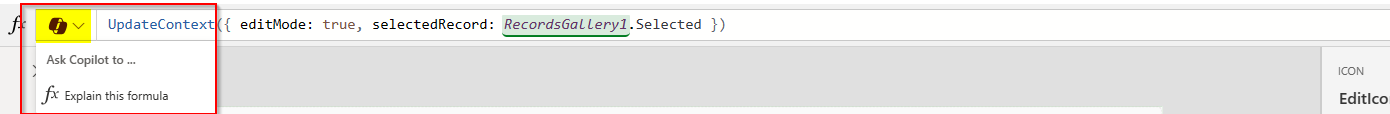

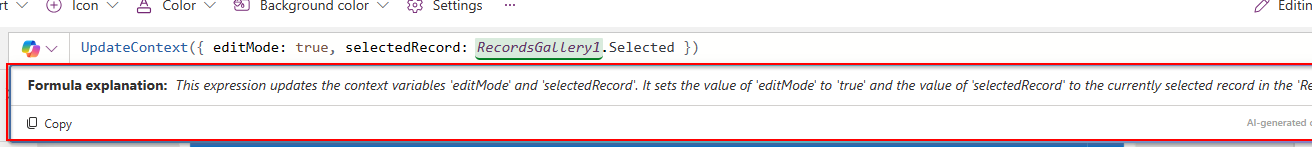

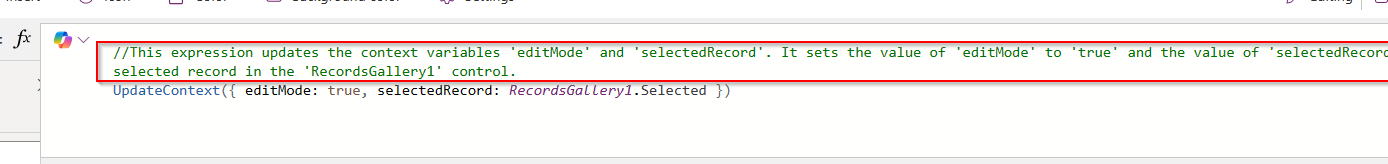

- Apply Business Logic: Use Power Fx formulas to create dynamic interactions

- Test the App: Use Preview mode to validate functionality

- Publish and Share Your App: Deploy the app and control access using Microsoft Entra ID (formerly Azure AD)
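The "Apply Business Logic" step above can be sketched with a single Power Fx formula on a button. This is only an illustration, not the one right way to do it: `Expenses` is an assumed data source with `Title` and `Amount` columns, and `txtTitle`, `txtAmount`, and `scrHome` are hypothetical control and screen names.

```powerfx
// OnSelect property of a hypothetical "Save" button
If(
    // Simple validation: the title must not be empty
    IsBlank(txtTitle.Text),
    Notify("Please enter a title.", NotificationType.Error),

    // Otherwise create a new record in the assumed Expenses data source,
    // confirm to the user, and go back to the home screen
    Patch(
        Expenses,
        Defaults(Expenses),
        { Title: txtTitle.Text, Amount: Value(txtAmount.Text) }
    );
    Notify("Expense saved.", NotificationType.Success);
    Navigate(scrHome, ScreenTransition.Fade)
)
```

You can try a formula like this in Preview mode before publishing: leave the title empty to see the error message, then fill it in to see the record saved.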

Best Practices for Building Canvas Apps

- Plan Your App Structure – Define screens, navigation, and key functionalities before starting.

- Optimize Performance – Reduce unnecessary data calls and use delegation-friendly queries.

- Use Components for Reusability – Create custom components for commonly used UI elements.

- Ensure Responsive Design – Design layouts that work across multiple device sizes.

- Leverage Power Automate for Automation – Automate approvals, notifications, and data processing.
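As a rough illustration of the "delegation-friendly queries" tip above, compare two formulas for a gallery's Items property. `Expenses` and `txtSearch` are hypothetical names, and whether a formula is delegable depends on the data source, so treat this as a sketch that assumes a SharePoint list.

```powerfx
// Delegation-friendly: StartsWith on a text column can be delegated
// to SharePoint, so the filtering happens at the data source and
// only matching rows are returned to the app
Filter(Expenses, StartsWith(Title, txtSearch.Text))

// Not delegable for SharePoint: the "in" substring test runs in the app,
// so only the first batch of rows (500 by default) is evaluated
Filter(Expenses, txtSearch.Text in Title)
```

Power Apps Studio flags the second formula with a delegation warning, which is a useful hint that results may be incomplete on large lists.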

What’s Next?

In the next post, we’ll walk through setting up your first Canvas App from scratch, covering app layout, adding controls, and connecting to a data source.

Stay tuned! Don’t forget to follow along…

Cheers,

PMDY